Shared services vs. cross-functional teams – a flow perspective

In the agile world, there is often debate about whether shared services or cross-functional teams are the better choice. From the perspective of flow metrics and value stream optimisation, there are clear scenarios in which shared services offer advantages and situations in which integrated teams are superior. When does centralised expertise make sense and when do integrated teams ensure better flow?

The answer to this question is not only a methodological decision, but also a strategic one. It affects how quickly organisations can respond to change, how transparent their processes are and how efficiently resources are used. Both models have their merits, but their success depends heavily on the context – for example, the type of work, the frequency of special requirements or the maturity of the organisation in terms of collaboration and self-organisation.

This assessment is based on insights gained from flow analysis and the evolutionary change management approach in the Kanban environment. Metrics such as work in progress (WIP), cycle time and throughput are used to recognise, based on facts, which structure brings the greatest added value in each specific case. The aim is to design the value stream in such a way that waiting times are minimised, risks are controlled and capacities are optimally utilised.

Cross-functional teams: When integration is prioritised

Immediate availability and reduced coordination costs

Cross-functional teams excel when fast feedback cycles and minimal waiting times are crucial. According to Dan Vacanti, integrated skills shorten cycle times by eliminating dependencies. A Scrum team with its own UX designer and tester delivers features faster than if it had to wait for external services.

The immediate availability of skills directly within the team not only reduces waiting times but also the amount of communication required. Decisions can be made immediately, tests can be carried out promptly and adjustments can be implemented straight away. This reduces the risk of requirements being watered down or misunderstood as they pass between different departments.

Example: An e-commerce team with its own front-end development, back-end development, UX design and testing can design, develop, test and roll out a new checkout function within a few days. If parts of this work were outsourced to shared services, waiting times for feedback or capacity could delay the entire process by weeks.

Cultural factors and trust

In organisational units with a high level of trust, cross-functional teams enable autonomous decisions without having to wait for external approvals. The Kanban principles emphasise that teams with their own process sovereignty can respond more quickly and specifically to customer needs.

Autonomy also promotes motivation: those who are allowed to make decisions independently identify more strongly with the results.

At the same time, the team develops a broader understanding of the entire product lifecycle, as everyone involved can see the impact of their work on the end user.

Example: In a software-as-a-service company, a cross-functional product team can test new features directly with selected customers, incorporate feedback and roll them out again without having to rely on formal handovers to other departments.

However, in conservative organisations with strict compliance requirements, shared services often remain necessary, for example to minimise legal risks or ensure binding standards. In such cases, hybrid models can be useful, in which the team works autonomously but draws on central expertise for certain review steps.

Flow metrics as a basis for decision-making

Work in progress (WIP) and cycle time

An overloaded shared service is visible in the cumulative flow diagram (CFD) as widening WIP bands. The vertical distance between the ‘start’ line and the ‘end’ line shows the current WIP; the horizontal distance approximates the average cycle time. The wider the gap, the longer tasks wait before they are completed, which is a clear sign of a bottleneck.

Why this is important:

Growing WIP bands indicate that work is being started faster than it is finished, so too many tasks are in progress at the same time.

A high WIP value almost always leads to longer cycle times because tasks compete for attention and context switching is constant.

In shared services in particular, such bottlenecks can quickly delay the entire value stream if many teams depend on the same central unit.

Example: A central UX team handles requests from five different product areas. When each area submits several new designs, the WIP grows rapidly. Without limits, teams have to wait several weeks for their designs to be finalised, even if the actual design work only takes a few days.
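The link between WIP and waiting time in this example can be approximated with Little's Law (average cycle time = average WIP / average throughput). The following is a minimal sketch rather than a forecast: the figures (30 or 10 open designs, 5 finished designs per week) are hypothetical, and the relationship only holds for a reasonably stable system.

```python
def average_cycle_time(wip: float, throughput_per_week: float) -> float:
    """Little's Law: average cycle time = average WIP / average throughput."""
    if throughput_per_week <= 0:
        raise ValueError("throughput must be positive")
    return wip / throughput_per_week


# Hypothetical central UX team that finishes 5 designs per week.
without_limit = average_cycle_time(wip=30, throughput_per_week=5)
with_limit = average_cycle_time(wip=10, throughput_per_week=5)

print(f"30 open designs: about {without_limit:.0f} weeks per design")
print(f"10 open designs: about {with_limit:.0f} weeks per design")
```

With unchanged throughput, reducing the number of open designs is the only lever that shortens the average wait in this simplified model.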

Practical approach: WIP limits at the service level allow you to control how many tasks are in the system at any given time. This forces prioritisation and prevents too many half-finished tasks from blocking the flow.
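How such a limit behaves can be sketched in a few lines. This is an illustrative model only: the class `ServiceBoard`, its methods and the limit of 2 are assumptions made for this example, not part of any Kanban tool.

```python
from collections import deque
from typing import Optional


class ServiceBoard:
    """Toy model of a shared-service board with a WIP limit (hypothetical)."""

    def __init__(self, wip_limit: int) -> None:
        self.wip_limit = wip_limit
        self.backlog = deque()   # requests waiting to be started
        self.in_progress = []    # requests currently being worked on

    def request(self, item: str) -> None:
        """A product team submits a new request."""
        self.backlog.append(item)

    def pull(self) -> Optional[str]:
        """Start the next request only if the WIP limit allows it."""
        if len(self.in_progress) >= self.wip_limit or not self.backlog:
            return None
        item = self.backlog.popleft()
        self.in_progress.append(item)
        return item

    def finish(self, item: str) -> None:
        """Completing a request frees capacity for the next pull."""
        self.in_progress.remove(item)


board = ServiceBoard(wip_limit=2)
for name in ["design-a", "design-b", "design-c"]:
    board.request(name)

started = [board.pull(), board.pull(), board.pull()]
print(started)  # the third pull is refused until something is finished
```

The refused pull is the point of the limit: the third request stays visible in the backlog, where it can be reprioritised, instead of becoming another half-finished task.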

Throughput and variability

Shared services should make their throughput transparent, i.e. how many tasks are actually completed in a given period of time. Cycle time scatter plots complement this by showing both the typical cycle time and how widely individual tasks deviate from it.
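Throughput can be derived directly from completion dates. A minimal sketch, assuming the service records one completion date per finished task; the function name `weekly_throughput` and the dates are hypothetical.

```python
from collections import Counter
from datetime import date


def weekly_throughput(completion_dates):
    """Count finished tasks per (ISO year, ISO week)."""
    return Counter(d.isocalendar()[:2] for d in completion_dates)


# Hypothetical completion dates of a shared service.
completed = [
    date(2024, 3, 4), date(2024, 3, 6), date(2024, 3, 8),
    date(2024, 3, 11), date(2024, 3, 14),
]

for (year, week), count in sorted(weekly_throughput(completed).items()):
    print(f"{year}-W{week:02d}: {count} tasks completed")
```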

Why this is important:

Low variation (e.g. cycle times that deviate by less than 20% from the average) means that tasks are completed with a high degree of predictability. This is ideal for plannable, recurring activities such as compliance checks or standard deployments.

High variation indicates that some tasks take significantly longer than others. This can be a sign of a lack of standardisation, unclear requirements or frequent interruptions.

Example: A shared service team for database administration completes an average of ten migrations per month. If the cycle time per migration varies by ± 2 days, product teams can plan reliably. If it varies by ± 10 days, planning is much more difficult, which indicates that processes should be optimised or tasks structured differently.
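The difference between a predictable and an unpredictable service can be quantified from recorded cycle times. The sketch below is one possible reading, not a standard: it treats variation as the coefficient of variation (standard deviation divided by mean) and adds an 85th percentile computed with the nearest-rank method. Both data sets are invented for illustration.

```python
import math
import statistics


def cycle_time_summary(cycle_times_days):
    """Summarise cycle times: mean, coefficient of variation, 85th percentile."""
    mean = statistics.mean(cycle_times_days)
    stdev = statistics.stdev(cycle_times_days)  # sample standard deviation
    ordered = sorted(cycle_times_days)
    rank = math.ceil(0.85 * len(ordered))       # nearest-rank percentile
    return {"mean": mean, "cv": stdev / mean, "p85": ordered[rank - 1]}


# Hypothetical cycle times in days; both series have the same average.
stable = [9, 10, 11, 10, 9, 11, 10, 10, 9, 11]
erratic = [2, 25, 4, 18, 3, 30, 5, 6, 2, 5]

for label, data in (("stable", stable), ("erratic", erratic)):
    s = cycle_time_summary(data)
    print(f"{label}: mean={s['mean']:.1f} d, cv={s['cv']:.0%}, p85={s['p85']} d")
```

Both series average ten days, yet only the first would let product teams commit to a date with confidence. This is why the average alone is not a sufficient planning basis.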

Comparison with cross-functional teams: In innovative projects with a high degree of uncertainty, such as when requirements often arise spontaneously, cross-functional teams can react more flexibly because they have the necessary skills directly within the team and do not have to adapt to the throughput rate of a shared service.

Practical recommendations

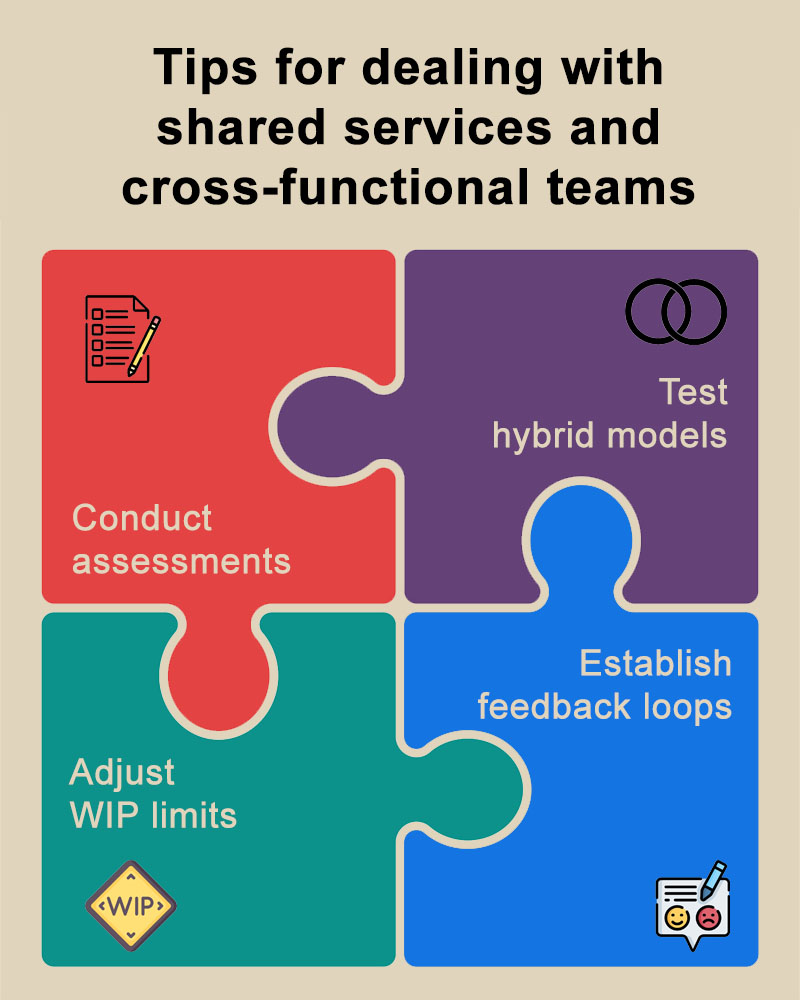

Conduct assessments

Measure the transaction costs for shared service requests. This includes not only direct processing times, but also waiting times, coordination rounds and the time teams have to wait for feedback. Also analyse how heavily specialists are utilised and how often immediate availability is required in the value stream.

Practical tip:

- Conduct a value stream analysis to identify dependencies and bottlenecks.

- Use metrics such as cycle time, WIP and throughput to quantify the current situation.

- Document typical process variants to see which tasks can be standardised and which cannot.
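One way to make the transaction costs described above tangible is to compare the time actually spent working on a request with the total elapsed time. The following sketch assumes that each request is logged with three durations; the class `ServiceRequest`, its field names and the numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ServiceRequest:
    touch_days: float         # time someone actively worked on the request
    waiting_days: float       # time spent in queues or waiting for feedback
    coordination_days: float  # clarifications, meetings, handovers


def flow_efficiency(requests):
    """Share of total elapsed time that was active work (0.0 to 1.0)."""
    touch = sum(r.touch_days for r in requests)
    total = sum(
        r.touch_days + r.waiting_days + r.coordination_days for r in requests
    )
    return touch / total if total else 0.0


# Hypothetical log of two requests to a shared service.
log = [ServiceRequest(3, 15, 2), ServiceRequest(2, 7, 1)]
print(f"Flow efficiency: {flow_efficiency(log):.0%}")
```

A low value suggests that most of the elapsed time is waiting and coordination rather than work, which is the kind of cost that integrating the skill into the team would remove.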

Test hybrid models

Use shared services for clearly defined, rarely needed or highly specialised tasks (e.g. compliance, infrastructure), but integrate frequently needed skills such as testing, UX or DevOps expertise directly into the teams. This allows you to benefit from both specialisation and rapid responsiveness.

Practical tip:

- Start with a pilot project in which a previously central skill is integrated into the team on a trial basis.

- Observe the impact on throughput times and quality.

- Maintain service agreements with central units so that you can draw on additional capacity at short notice if demand increases.
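The effect of such a pilot can be checked with a simple before/after comparison. This is a rough sketch with invented cycle times; the function name `median_change` is an assumption, and a handful of data points is not enough to draw firm conclusions.

```python
import statistics


def median_change(before_days, after_days):
    """Relative change in median cycle time; negative means faster delivery."""
    before = statistics.median(before_days)
    after = statistics.median(after_days)
    return (after - before) / before


# Hypothetical cycle times (days) before and during the pilot.
before_pilot = [12, 15, 9, 20, 14]
during_pilot = [6, 8, 7, 10, 5]

change = median_change(before_pilot, during_pilot)
print(f"Median cycle time changed by {change:+.0%}")
```

The median is used here instead of the mean so that a single unusually slow task does not dominate the comparison.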

Adjust WIP limits

Limit the number of parallel requests to shared services to avoid overload, for example with Kanban WIP limits per service channel. Combined with explicit prioritisation, this prevents urgent tasks from getting stuck in a queue behind many less important requests.

Practical tip:

- Set separate limits for standard tasks and for urgent expedited cases.

- Communicate these limits openly so that all stakeholders understand the consequences.

- Use visualisation (Kanban boards, CFD) to make bottlenecks transparent.
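Separate limits per class of service can be expressed as a small policy check. The classes, the limit values and the function `can_start` below are assumptions chosen for illustration; suitable values depend on the capacity of the service in question.

```python
from collections import Counter

# Hypothetical limits: four standard tasks and one expedited case at a time.
LIMITS = {"standard": 4, "expedite": 1}


def can_start(in_progress_classes, service_class):
    """Return True if another task of this class may be started."""
    active = Counter(in_progress_classes)[service_class]
    return active < LIMITS[service_class]


current = ["standard", "standard", "standard", "standard"]
print(can_start(current, "standard"))  # standard lane is full
print(can_start(current, "expedite"))  # the expedite lane is still free
```

Keeping the expedite limit at one in this sketch reflects the idea that expedited cases are the exception; if everything is urgent, the separate lane loses its purpose.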

Establish feedback loops

Hold regular joint retrospectives with shared service and product teams to recognise bottlenecks early and improve processes iteratively. This reduces friction and strengthens trust between the units involved.

Practical tip:

- Conduct monthly or quarterly service reviews in which metrics are evaluated and improvement measures are decided upon.

- Work with clear improvement hypotheses (‘If we change X, we expect Y as a result’) to test measures in a targeted manner.

- Document findings and share them across the organisation to avoid duplication of work.

Figure: Tips for dealing with shared services and cross-functional teams

Conclusion

The decision between shared services and cross-functional teams depends on several factors: the degree of specialisation, the demand structure, the risks involved in task fulfilment and organisational maturity. Flow metrics such as work in progress (WIP), cycle time and throughput allow this decision to be made on evidence rather than gut feeling.

Shared services score particularly well where specialised expertise is rare but critical, or where central standards must be adhered to. They can reduce risks, pool capacities and optimise costs, provided that their work is clearly prioritised, transparent and protected from overload by WIP limits.

Cross-functional teams reveal their strengths in environments where rapid iterations, direct communication and immediate availability are crucial. They shorten decision-making processes, increase autonomy and improve response times to customer feedback.

In many organisations, the optimal solution is not an either/or situation, but rather a hybrid structure. Frequently required skills are integrated into the team, while rare or highly specialised skills remain centrally pooled. This creates a balance between efficiency and responsiveness.

Ultimately, flexibility does not come from rigid structures, but from clear prioritisation, continuous adaptation and the conscious use of flow data. Those who consistently measure and visualise metrics and use them as a basis for improvement can achieve both: a stable flow in the value stream and the ability to respond quickly to change.

Benjamin Igna

Benjamin Igna is a founder and consultant at Stellar Work GmbH. He has successfully led transformation projects and managed complex projects in the automotive and technology sectors, always with a focus on measurable results and operational efficiency. His expertise lies in aligning strategy and execution to drive sustainable organisational growth.

He also hosts the Stellar Work podcast, in which he profiles remarkable individuals who are redefining the boundaries of the product development process.