Integration test first with Gherkin and Docker

Many software projects start with unit tests. That’s good, but often not enough. As soon as several components interact, real databases are involved or interfaces need to be tested, it becomes clear that reality is more complex than a single function call.

In the following article, I will show how integration tests can be incorporated into the development process right from the start. Gherkin and Docker create a test environment that is technically robust, easy to understand and can be run automatically, thus ensuring greater confidence and quality throughout the entire system.

Unit tests between ideal and reality

Automated unit tests are a proven tool in software development for contributing to the quality of applications. They check the smallest building blocks of a piece of software, i.e. individual components, modules or classes with their methods and functions, for correct behaviour. This ensures that the behaviour of these building blocks is maintained even after changes or refactorings.

Unit tests are an integral part of automated builds. They are an important quality criterion before changes can be incorporated into the central code base. That is why many projects place a strong focus on these tests. In combination with high test coverage, this is often considered a sign of good software quality.

In practice, however, unit tests do not always deliver the hoped-for time savings. They are undoubtedly of great value, but they also have limitations that often slow down development teams.

High adaptation effort in case of changes

Since unit tests check very small units, they often have to be adapted as soon as the architecture or a method changes. Especially in the case of refactoring, the effort required to get all tests running again can be considerable. As a result, some restructuring is postponed or avoided altogether due to time constraints, especially when a release is imminent.

Tests with a lot of mocking but little significance

The more closely you adhere to test-driven development and cut the units smaller and smaller, the more mock code is created. The tests then often verify little more than that a class delegates its calls to mocks, while real functionality is hardly checked at all. This raises the question of how meaningful such tests really are in the end.

Trivial cases overrepresented, borderline cases neglected

Often, simple scenarios are tested first and foremost because they are quick to implement and increase test coverage. Complex or rare edge cases, which only become apparent when several components interact, remain untested.

Untested loose ends

Certain areas such as user interfaces, file systems, databases or networks are usually omitted from unit tests. The reasons for this are the effort involved, technical limitations or long runtimes. This means that part of the application always remains untested, which only becomes apparent in manual tests.

Unit tests are available, but the software still does not work

Despite all efforts, it can happen that an application does not run properly after successful unit tests. This is because unit tests consider components in isolation and not their interaction. They are therefore important, but never sufficient to ensure that software functions correctly.

And how do integration tests work?

Even software with 100% test coverage can fail if its components do not work together properly. Unit tests provide valuable confidence on a small scale, but the big picture often remains untested. Integration tests close this gap. They check whether all components work together and whether the system as a whole performs as it should.

While unit tests look at isolated functions, integration tests test real processes across multiple layers, from the service to the database to the interface. This makes them more complex, but also much more meaningful.

In practice, this means that an application is tested in an environment that is as realistic as possible. Instead of mocked database accesses, a real database is used; instead of simulated interfaces, real API endpoints are addressed. This allows technical and functional errors to be detected at an early stage.

Of course, this approach comes at a price. Integration tests are more complex, require more infrastructure and runtime, and debugging them can be more challenging. However, the gain in confidence in the functionality of the overall system is considerable.

In order for integration tests to fully realise their added value, they should meet a few basic requirements:

Technical comprehensibility

Integration tests should be formulated in such a way that they are also comprehensible to people outside of development. Ideally, the test cases should be derived directly from the system requirements and describe business scenarios.

Coverage of multiple layers

An integration test should cover as many layers of the system as possible. This is the only way to check whether data flows, interfaces and logic work together.

Automated execution

The tests should be able to run automatically from the outset. This ensures repeatability and enables easy integration into a CI/CD pipeline.

Maintainability and stability

Since integration tests are usually more extensive, a clear structure is crucial. Stable tests that are easy to adapt create trust and acceptance within the team.

If a test meets these requirements, it can provide valuable insights early on in the development process. It shows whether key functions interact as planned and whether architectural decisions are viable in practice.

This is precisely the approach I will take in the next step. Using a small application as an example, I would like to show how integration tests with Gherkin and Docker as the technical basis can be incorporated into a project from the outset.

Sample project: to-do list application

To illustrate the ‘integration test first’ approach, let’s take a look at a simple example: an application for managing and sharing to-do lists. It is well suited for mapping the structure of a real system while keeping the scope manageable.

Requirements for the example system

The application should be available in a web browser and provide basic functions for users and to-do lists:

- Users can create their own to-do lists.

- A to-do list can be shared with other users.

- Each user can see both their own and shared to-do lists.

- A shared to-do list shows which user originally shared it.

These requirements form the basis for subsequent testing. They are deliberately kept simple, but represent typical technical scenarios in which several system layers interact – from data storage and logic to the interface.

Technology stack

As this is a web application, proven technologies from the .NET environment are used. They enable a clean separation between backend, frontend and database, as well as easy integration into automated test environments.

- Frontend: Angular

- Backend: ASP.NET Core Web API

- Database: PostgreSQL

- Test frameworks: XUnit, Reqnroll (as Gherkin implementation)

- Infrastructure: Testcontainers for Docker

In the following, we will focus on the backend and the integration of the tests with the database. The frontend is briefly mentioned in the outlook, but plays a minor role in understanding the test setup.

This lays the foundation for the next step, which is to dive into the concrete implementation and show how integration tests with Gherkin and Docker can be realised in practice.

Implementing the sample project

To implement the project, we start with a clean project structure and the basic dependencies. The goal is to create an environment in which the tests can be executed right from the start and can later be easily integrated into a CI/CD pipeline.

Project structure and preparation

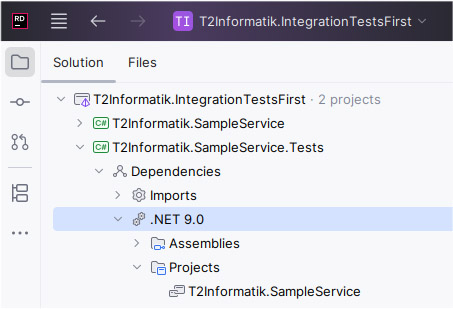

We create two projects:

- an ASP.NET Core Web API project for the actual service

- an XUnit test project for the integration tests

The test project references the Web API project in order to work directly with its code and interfaces.

Figure 1: Creating a project structure

To get started, you will need a few NuGet packages to enable database access, test execution and infrastructure:

Web API project:

- Npgsql.EntityFrameworkCore.PostgreSQL

- Microsoft.EntityFrameworkCore.Design

Test project:

- FluentAssertions – for meaningful and readable test expressions

- Microsoft.AspNetCore.Mvc.Testing – for starting the Web API in the test environment

- Npgsql – for database access

- Reqnroll.Tools.MsBuild.Generation

- Reqnroll.xUnit – for executing Gherkin tests

- Testcontainers.PostgreSql – to automatically provide a Docker container for the tests

In addition, we recommend the Reqnroll plugin for Visual Studio or JetBrains Rider. It offers syntax highlighting for feature files and allows you to jump directly between scenarios and C# test steps.

First Gherkin test

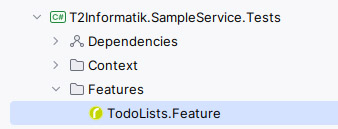

Once all packages have been installed, a new file with the extension .feature is created in the test project. It describes the desired behaviour of the application in Gherkin syntax.

Figure 2: Creating a file with the extension .feature

An example of such a feature is:

```gherkin
Feature: Todo Lists

  This feature is about handling todo lists for different users, including sharing.

  Background:
    Given The system has the following users with the following owned todo lists
      | UserName | Owned Todo Lists                 |
      | Theo     | "Theos Todos 1", "Theos Todos 2" |
      | Tina     |                                  |

  Rule: Owned Todos are only for the owners

    Scenario: Create a new todo
      When "Tina" has created the todo list "Tinas Todos"
      Then "Theo" has the todo lists "Theos Todos 1" and "Theos Todos 2"
      And "Tina" has the todo list "Tinas Todos"

  Rule: Shared todos are visible for shared users.

    Scenario: Share todo with a user
      When "Theo" shares "Theos Todos 1" with "Tina"
      Then "Theo" has the overall todo lists
        | Name          | Shared From |
        | Theos Todos 1 |             |
        | Theos Todos 2 |             |
      And "Tina" has the overall todo lists
        | Name          | Shared From |
        | Theos Todos 1 | Theo        |
```

Reqnroll is the C# implementation of the Gherkin language [1] for running BDD. It offers connections to common test frameworks such as NUnit, MSTest or, as in our case, XUnit. Ultimately, one or more XUnit tests are generated from each feature file, which can be easily executed using the Visual Studio test runner, dotnet test or Rider. A source generator from Reqnroll takes care of generating the XUnit tests from the feature files.

And how is the feature file structured? The easiest way to explain this is with individual keywords:

Feature: The title or name of the feature file. Here, you should choose a title that corresponds to a technical feature.

Scenario: Corresponds to a test case with a set of steps consisting of Given/When/Then clauses. A test is generated from a scenario. The name of the scenario forms the technical name of the unit test.

Given/When/Then: Each Given/When/Then line is a so-called test step and serves to formulate the scenario with meaningful text. Placeholders can be used as parameters within such a step. These can be text (marked by quotation marks) or tables, for example.

Rule: Description of a business rule that is assigned to one or more scenarios. This allows different scenarios to be divided into groups and informs the reader of the focus.

Background: The Given/When/Then steps described in the background describe behaviour that should be executed beforehand for all scenarios in this feature. The background can be used, for example, to describe master data in the system.

And how does Gherkin know what should happen in each step? The answer can be found in the StepDefinitions.

StepDefinitions: The feature files themselves are independent of the programming language used and are used to formulate test cases. Reqnroll generates one XUnit fact per scenario, which is composed of Given/When/Then steps.

The Given/When/Then steps must be implemented by a developer. To do this, classes are defined in Reqnroll that are linked to the test steps by means of code attributes. These classes can be defined with attributes manually or using the Reqnroll plugin. In the first step, a new file must be created. We generate all steps in a Steps.cs:

```csharp
using Reqnroll;

namespace T2Informatik.SampleService.Tests;

[Binding]
public class Steps
{
    [Given("The system has the following users with the following owned todo lists")]
    public void GivenTheSystemHasTheFollowingUsersWithTheFollowingOwnedTodoLists(Reqnroll.Table table)
    {
        ScenarioContext.StepIsPending();
    }
}
```

The generated method, which can also be renamed, is simply linked to the previously selected Given. The table is recognised and Reqnroll generates code so that we receive the data as a table object. ScenarioContext.StepIsPending() marks the step as pending, so the test does not pass until the step has been implemented.

Dealing with StepDefinitions

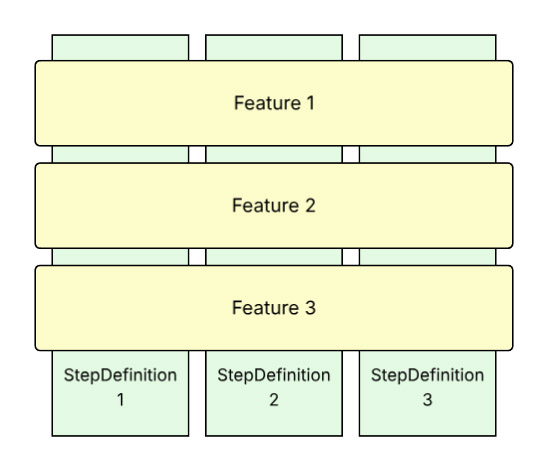

As a rule of thumb, feature files are orthogonal to StepDefinitions:

Figure 3: Feature files and StepDefinitions

StepDefinitions form the bridge between feature files and technical implementation. Several differently formulated steps can refer to the same method by binding several Given, When or Then attributes to a method. This results in increasing reuse over time, and internal refactorings are possible without having to adapt the tests themselves.
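As a minimal sketch of binding several phrasings to one method: the class name SharingSteps and the second phrasing are assumptions made for illustration and are not taken from the sample project. The first phrasing matches the When step from the feature file above.

```csharp
using Reqnroll;

// Hypothetical step class, for illustration only.
[Binding]
public class SharingSteps
{
    // Both phrasings are bound to the same method. The quoted values are
    // passed by position, so their order must be identical in each phrasing.
    [When("{string} shares {string} with {string}")]
    [When("{string} makes {string} available to {string}")]
    public void WhenUserSharesTodoList(string owner, string todoListName, string recipient)
    {
        // Placeholder body, as in the generated skeleton shown earlier.
        ScenarioContext.StepIsPending();
    }
}
```

A feature file could then use either wording without any change to the C# code, as long as the parameters appear in the same order.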

Once the tests have been defined and the StepDefinitions have been created as empty methods, the next step is to set up the test infrastructure. To do this, it is important to understand the service in more detail in order to set up the environment correctly and completely.

Setting up the service project

The service project uses PostgreSQL in combination with Entity Framework Core in a code-first approach. This means that the database and schema are generated from the service.

For simplicity, the sample code in the controllers accesses the entity model directly. However, custom ViewModel objects are returned to the client or received from there.

A simplified example of how all users are read from the system via GET:

```csharp
using Microsoft.AspNetCore.Mvc;
using Microsoft.EntityFrameworkCore;
using T2Informatik.SampleService.PersistenceModel;

namespace T2Informatik.SampleService.Controllers;

public record UserReadViewModel(int Id, string UserName);

[ApiController]
[Route("api/[controller]")]
public sealed class UsersController(SampleServiceDbContext dbContext) : ControllerBase
{
    [HttpGet]
    public async Task<IActionResult> GetAll()
    {
        var viewModels = (await dbContext.User.ToListAsync())
            .Select(user => new UserReadViewModel(user.Id, user.UserName));
        return Ok(viewModels);
    }
}
```

The other endpoints are implemented in the same style. In a real project, you would not normally access the persistence layer directly in the controllers. For this article, the structure has been deliberately simplified so that the focus remains on the tests.

Creating the test infrastructure

Since we are concentrating on the backend, we first define the conditions under which the tests are to run:

- A PostgreSQL database is started as a Docker container.

- A test service is created using the .NET test framework, which is accessed via HttpClient.

- For each test, a database with the required schema is initialised.

- All test data is explicitly created in the test. There is no ‘magic’ master data in the background and no externally assumed inventories.

- The StepDefinitions communicate exclusively with the backend via HttpClient.

- All calls via HttpClient use official backend interfaces, i.e. the same endpoints that the frontend would use.

- Direct access to the database is not permitted during the test.

Test run

Based on these rules, the following test procedure results:

1. The PostgreSQL container is started before all tests.

2. For each individual test, a new database with a unique name is created, a test service is created and started with the database connection, the test is executed, and the created database is deleted again.

3. After all tests, the Docker container is shut down.

In this way, we minimise side effects between tests. Tests can run sequentially or even in parallel without affecting each other.

Creating and deleting Docker containers

There is a separate class, TestBed, for handling the database container. It is valid across all test runs, starts and stops the Docker container, and provides a prepared TestCase object for each test using CreateTestCaseAsync().

```csharp
using Testcontainers.PostgreSql;
using Xunit;

namespace T2Informatik.SampleService.Tests.Context;

public class TestBed : IAsyncLifetime
{
    private PostgreSqlContainer? _postgreSqlContainer;

    public async Task<TestCase> CreateTestCaseAsync()
    {
        if (_postgreSqlContainer == null)
            throw new InvalidOperationException("PostgreSQL container has not been built");

        await _postgreSqlContainer.StartAsync();
        var testContext = new TestCase(_postgreSqlContainer.GetConnectionString());
        return testContext;
    }

    // Only configures the container; StartAsync is called in CreateTestCaseAsync.
    public Task InitializeAsync()
    {
        _postgreSqlContainer = new PostgreSqlBuilder()
            .WithImage("postgres:17.5")
            .WithName("SampleServiceDatabaseContainer")
            .WithUsername("test")
            .WithPassword("test")
            .Build();
        return Task.CompletedTask;
    }

    public Task DisposeAsync() =>
        _postgreSqlContainer != null
            ? _postgreSqlContainer.DisposeAsync().AsTask()
            : Task.CompletedTask;
}
```

Creating database and service

The actual database for a test is created in TestCase. This class is responsible for creating a separate database for each test run, starting the service, and providing an HttpClient.

```csharp
using Microsoft.AspNetCore.Mvc.Testing;
using Microsoft.Extensions.DependencyInjection;
using T2Informatik.SampleService.PersistenceModel;

namespace T2Informatik.SampleService.Tests.Context;

public class TestCase : IAsyncDisposable
{
    private readonly WebApplicationFactory<Program> _factory;

    public TestCase(string connectionString)
    {
        // Point the connection string to a database with a random, unique name.
        var connectionStringBuilder = new Npgsql.NpgsqlConnectionStringBuilder(connectionString)
        {
            Database = Guid.NewGuid().ToString("N"),
        };
        _factory = new SampleServiceWebApplicationFactory(connectionStringBuilder.ToString());
        HttpClient = _factory.CreateClient(
            new WebApplicationFactoryClientOptions { AllowAutoRedirect = false }
        );
    }

    // Creates the database and its schema from the Entity Framework Core model.
    public async Task InitializeAsync()
    {
        using var scope = _factory.Services.CreateScope();
        var dbContext = scope.ServiceProvider.GetRequiredService<SampleServiceDbContext>();
        await dbContext.Database.EnsureCreatedAsync();
    }

    public HttpClient HttpClient { get; }

    public ValueTask DisposeAsync() => _factory.DisposeAsync();
}
```

Essentially, TestCase receives the connection string from TestBed, uses it to create a new database with a random name, starts a web application, and creates an HttpClient, which we use in the tests.

Configuring WebApplicationFactory

The SampleServiceWebApplicationFactory class is a typical pattern from the ASP.NET Core Framework [2] for starting a web service in tests.

```csharp
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Mvc.Testing;
using Microsoft.Extensions.Configuration;

namespace T2Informatik.SampleService.Tests.Context;

internal sealed class SampleServiceWebApplicationFactory(string connectionString) : WebApplicationFactory<Program>
{
    protected override void ConfigureWebHost(IWebHostBuilder builder)
    {
        var configurationValues = new[]
        {
            new KeyValuePair<string, string?>("ConnectionStrings:Database", connectionString),
        };
        var configuration = new ConfigurationBuilder()
            .AddJsonFile("appsettings.json")
            .AddInMemoryCollection(configurationValues)
            .Build();
        builder
            .UseConfiguration(configuration)
            .ConfigureAppConfiguration(configurationBuilder =>
                configurationBuilder.AddInMemoryCollection(configurationValues)
            );
    }
}
```

A practical feature here is that the service configuration is set via an in-memory collection. This allows us to retain full control over the settings used and avoid side effects caused by other configuration files.

Helper functions for HttpClient

To ensure that the tests remain easy to read, there is an extension class for HttpClient. It encapsulates the most important calls and provides meaningful error messages if a request fails unexpectedly.

```csharp
using System.Text;
using System.Text.Json;
using System.Text.Json.Serialization;
using FluentAssertions.Execution;

namespace T2Informatik.SampleService.Tests.Context;

public static class HttpClientExtensions
{
    private static readonly JsonSerializerOptions SerializerOptions = new()
    {
        PropertyNameCaseInsensitive = true,
        Converters = { new JsonStringEnumConverter() },
    };

    // Sends the body as JSON via POST and fails the test on a non-success status code.
    public static async Task PostAsync<T>(this HttpClient client, string route, T body)
    {
        var content = GetContent(route, body, "post");
        var response = await client.PostAsync(route, content);
        await EvaluateStatusCodeAsync(response, route, "POST");
    }

    // Sends a GET request and deserialises the JSON response into T.
    public static async Task<T> GetAsync<T>(this HttpClient client, string route)
    {
        var response = await client.GetAsync(route);
        if (!response.IsSuccessStatusCode)
        {
            throw new AssertionFailedException(
                $"GET Request to {route} failed with status code {response.StatusCode}"
            );
        }
        return await DeserializeResponseAsync<T>(response, "GET", route);
    }

    private static async Task EvaluateStatusCodeAsync(
        HttpResponseMessage response,
        string route,
        string method
    )
    {
        if (!response.IsSuccessStatusCode)
        {
            var stream = await response.Content.ReadAsStringAsync();
            throw new AssertionFailedException(
                $"{method} Request to {route} failed with status code {response.StatusCode}. Message: {stream}"
            );
        }
    }

    private static StringContent? GetContent<T>(string route, T body, string method)
    {
        if (body == null)
            throw new InvalidOperationException($"{method} '{route}' with null body not allowed");

        var json = JsonSerializer.Serialize<object>(body, SerializerOptions);
        return new StringContent(json, Encoding.UTF8, "application/json");
    }

    private static async Task<T> DeserializeResponseAsync<T>(
        HttpResponseMessage response,
        string method,
        string route
    )
    {
        var stringContent = await response.Content.ReadAsStringAsync();
        var result = JsonSerializer.Deserialize<T>(stringContent, SerializerOptions);
        if (result == null)
        {
            throw new AssertionFailedException(
                $"Cannot deserialize {method} {route} to type {typeof(T).FullName}"
            );
        }
        return result;
    }
}
```

This makes it very easy to formulate a GET request, for example:

```csharp
var todoLists = await httpClient.GetAsync<TodoListReadViewModel[]>("api/TodoLists");
```

We receive the ViewModel types directly from the service, where they are also used for serialisation and deserialisation.

Note: I deliberately chose to hard-code the tests to the ViewModel types of the service. Alternatively, the types could be duplicated in the test project to achieve loose coupling. The advantage of the chosen variant is that incompatibilities are noticed immediately during compilation and not only at runtime.
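To illustrate the alternative, here is a minimal sketch of a duplicated type in the test project. UserReadDto and LooseCouplingExample are hypothetical names that do not appear in the sample project; the record simply mirrors the shape of UserReadViewModel.

```csharp
using System;
using System.Text.Json;

// Hypothetical test-local copy of the service's read model.
public sealed record UserReadDto(int Id, string UserName);

public static class LooseCouplingExample
{
    private static readonly JsonSerializerOptions Options = new()
    {
        PropertyNameCaseInsensitive = true,
    };

    // Deserialises a JSON array of users into the test-local type.
    public static UserReadDto[] ParseUsers(string json) =>
        JsonSerializer.Deserialize<UserReadDto[]>(json, Options) ?? Array.Empty<UserReadDto>();
}
```

With this variant, a renamed property on the service side would still compile. The mismatch would only show up at runtime, in this sketch as an empty UserName, which is exactly the trade-off described in the note.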

Integration into the Reqnroll process

With TestBed, TestCase and HttpClient, the technical basis for the tests has been laid. The next step is to control the process before and after the tests. This makes the implementation of the test steps much easier and follows a clear pattern. Reqnroll supports this with its tooling.

Hooks: Hooks are used in Reqnroll to execute your own code before or after certain phases of test execution. For our scenario, BeforeTestRun, AfterTestRun, BeforeScenario and AfterScenario are particularly interesting.

As with TestSteps, we place the hooks in a separate class Hooks, use the corresponding code attributes and implement methods. First, the frame before and after all tests:

```csharp
using Reqnroll;
using Reqnroll.BoDi;

namespace T2Informatik.SampleService.Tests.Context;

[Binding]
public sealed class Hooks
{
    private static TestBed? _testBed;

    [BeforeTestRun]
    public static async Task BeforeTestRun()
    {
        _testBed = new TestBed();
        await _testBed.InitializeAsync();
    }

    [AfterTestRun]
    public static async Task AfterTestRunAsync()
    {
        if (_testBed != null)
        {
            await _testBed.DisposeAsync();
        }
        _testBed = null;
    }
}
```

We then add the setup and cleanup for each scenario to the hooks. Here, a separate TestCase is created for each scenario, which registers the HttpClient and cleans it up again at the end:

```csharp
using Reqnroll;
using Reqnroll.BoDi;

namespace T2Informatik.SampleService.Tests.Context;

[Binding]
public sealed class Hooks(ScenarioContext scenarioContext, IObjectContainer container)
{
    // ... code from the previous listing.

    [BeforeScenario]
    public async Task BeforeScenarioAsync()
    {
        if (_testBed == null)
            throw new ArgumentNullException(nameof(_testBed));

        var testCase = await _testBed.CreateTestCaseAsync();
        scenarioContext.Set(testCase);
        container.RegisterInstanceAs(testCase.HttpClient);
        await testCase.InitializeAsync();
    }

    [AfterScenario]
    public async Task AfterScenarioAsync()
    {
        var testCase = scenarioContext.Get<TestCase>();
        if (testCase != null)
        {
            await testCase.DisposeAsync();
        }
    }
}
```

Reqnroll supports dependency injection in bound classes. By default, ScenarioContext and the DI container IObjectContainer can be requested via constructor.

IObjectContainer is used to register additional types that are to be injected into test classes later on. In our case, this is the HttpClient from the TestCase.

We use the ScenarioContext to store and retrieve information during a scenario, for example, the current TestCase. Since it only applies for the duration of a scenario, it is well suited for encapsulating such data and cleaning it up safely after the test run.

Implementation of the test steps

Once the infrastructure is in place, we can implement the test steps. To do this, we only need the parameters of the Gherkin steps and the HttpClient, which we receive via the constructor.

We start with the background step, which creates the users and their to-do lists:

```csharp
using FluentAssertions;
using Reqnroll;
using T2Informatik.SampleService.Controllers;
using T2Informatik.SampleService.Tests.Context;

namespace T2Informatik.SampleService.Tests;

[Binding]
public class Steps(HttpClient httpClient)
{
    [Given("The system has the following users with the following owned todo lists")]
    public async Task GivenTheSystemHasTheFollowingUsersWithTheFollowingOwnedTodoLists(Table table)
    {
        foreach (var row in table.Rows)
        {
            await httpClient.PostAsync("api/Users", new UserWriteViewModel(row["UserName"]));
            var userId = await GetUserIdFromNameAsync(row["UserName"]);

            var todoLists = row["Owned Todo Lists"]
                .Split(',')
                .Select(t => t.Trim(' ', '"'))
                .Where(t => !string.IsNullOrWhiteSpace(t));

            foreach (var todoListName in todoLists)
            {
                await httpClient.PostAsync($"api/Users/{userId}/TodoLists",
                    new TodoListWriteViewModel(todoListName));
            }
        }
    }

    private async Task<int> GetUserIdFromNameAsync(string userName) =>
        (await httpClient.GetAsync<UserReadViewModel[]>("api/Users"))
            .Single(u => u.UserName == userName).Id;
}
```

The primary constructor requests the HttpClient, which was provided in the scenario via the hooks. In the step itself, we call the REST API of the backend, create a user for each table row and generate the corresponding to-do lists.

The information from the table is processed manually here. Alternatively, the rows could also be serialised into separate object types. The simple variant is easy to read for this example, but automatic assignment may be more useful for larger tables.

Essentially, the code iterates through all rows, creates a user for each row, and then generates all to-do lists from the second column.
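The alternative mentioned above, mapping each row to its own object type, could look roughly like the following sketch. UserWithTodoLists and BackgroundTableMapper are hypothetical names that are not part of the sample project. The mapper takes a plain dictionary of column name to cell value so that it stays independent of Reqnroll; how a table row is passed to it is left open here.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical row type for the Background table.
public sealed record UserWithTodoLists(string UserName, IReadOnlyList<string> OwnedTodoLists);

public static class BackgroundTableMapper
{
    // Maps one table row (column name -> cell value) to a typed object.
    public static UserWithTodoLists Map(IReadOnlyDictionary<string, string> row) =>
        new(row["UserName"], ParseTodoListNames(row["Owned Todo Lists"]));

    // Splits a cell such as "A", "B" into names, using the same rules
    // as the step shown above: split on commas, trim spaces and quotes.
    public static IReadOnlyList<string> ParseTodoListNames(string cell) =>
        cell.Split(',')
            .Select(name => name.Trim(' ', '"'))
            .Where(name => !string.IsNullOrWhiteSpace(name))
            .ToArray();
}
```

The step method would then only loop over typed objects. Note that, like the original parsing, this sketch cannot handle to-do list names that themselves contain a comma.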

Next is a step that performs a check. As an example, we take the following line from the feature file:

```gherkin
Then "Theo" has the todo lists "Theos Todos 1" and "Theos Todos 2"
```

```csharp
[Then("{string} has the todo lists {string} and {string}")]
public async Task ThenHasTheTodoListsAnd(string userName, string todoListName1, string todoListName2)
{
    var userId = await GetUserIdFromNameAsync(userName);
    var todoListNames = (await httpClient.GetAsync<TodoListReadViewModel[]>($"api/Users/{userId}/TodoLists"))
        .Select(t => t.Name)
        .OrderBy(t => t)
        .ToArray();

    todoListNames
        .Should()
        .BeEquivalentTo(
            new[] { todoListName1, todoListName2 }
                .OrderBy(t => t));
}
```

The remaining steps follow the same pattern:

- Use the HttpClient to access the backend for reading or writing.

- Perform the necessary assertions.

- If necessary, use the ScenarioContext to exchange information between steps.

Showing all test steps in detail would be repetitive at this point. The structure shown is sufficient for understanding the concept.

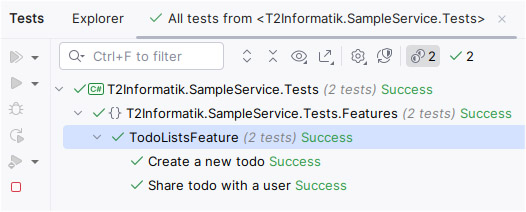

Figure 4: Result after test run

Once all tests have been implemented, the entire solution can be built and the tests can be run. The process usually takes only a few seconds. The longest part is typically the one-time download of the Docker image. It is particularly convenient if the same Docker image version is used for the service in development as in the tests.

Outlook: Integration tests taken further

The approach shown forms the basis for integrating integration tests into the development process right from the start. Gherkin, Reqnroll and Docker create a test environment that is technically understandable, robust and can be executed automatically.

Of course, this structure can be expanded as desired. An obvious further development would be for the product owner or the specialist team to formulate the feature files themselves. In this way, acceptance criteria could be implemented directly as automated tests – a real step towards ‘living documentation’.

Another useful step is to integrate the tests into the CI/CD pipeline. Modern build systems such as GitHub Actions, GitLab CI or Azure DevOps support Docker environments without any problems. This allows the tests to be run automatically with every build, which significantly speeds up feedback on changes.

Authentication can also be easily added. For example, if a JWT token is used for requests, it can be generated in the test context and sent via the HttpClient. This allows scenarios with user roles or permissions to be tested realistically.
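A minimal sketch of the sending side, assuming the token has already been generated elsewhere in the test context. The extension class HttpClientAuthExtensions and the method WithBearerToken are hypothetical names, and how the token is created depends on the authentication setup of the service.

```csharp
using System.Net.Http;
using System.Net.Http.Headers;

// Hypothetical helper, not part of the sample project.
public static class HttpClientAuthExtensions
{
    // Adds "Authorization: Bearer <token>" to every request sent by this client.
    public static HttpClient WithBearerToken(this HttpClient client, string token)
    {
        client.DefaultRequestHeaders.Authorization =
            new AuthenticationHeaderValue("Bearer", token);
        return client;
    }
}
```

Because the header is set on the client itself, it could be applied once per scenario, for example in the BeforeScenario hook before the HttpClient is registered.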

Finally, Gherkin offers the option of using existing feature files as a basis for end-to-end testing. This allows the same business scenarios to be revalidated at the system level – only this time with an integrated front end. The effort remains manageable, as many test modules and definitions can be reused.

This shows that integration tests are not only an additional quality assurance mechanism, but also an effective tool for continuously harmonising architecture, logic and business functionality.

Notes:

You are welcome to view the sample project in detail, clone it and adapt it for your own purposes.

[1] Cucumber: Gherkin Reference

[2] ASP.NET Core Framework


Peter Friedland

Software Consultant at t2informatik GmbH

Peter Friedland works at t2informatik GmbH as a software consultant. In various customer projects he develops innovative solutions in close cooperation with partners and local contact persons. And in his private life, the young father plays the cello in the Wildau Music School orchestra.
