From Monolith to Microservices

Do you have experience in breaking up monoliths or, better still, in slowly dismantling and hollowing out a monolith? I would like to describe the path we took over the last 5 years. During that time, we gradually dismantled an existing monolith with the goal of slowly evolving the existing architecture into a microservice architecture. Along the way, we not only overcame technical difficulties but also had to adapt many of our approaches to the new challenges. I am reporting here mainly on the technical restructuring, but of course the priority was to evolve the business logic. Technology was and is only a means to an end.

Starting Point: The Business Process

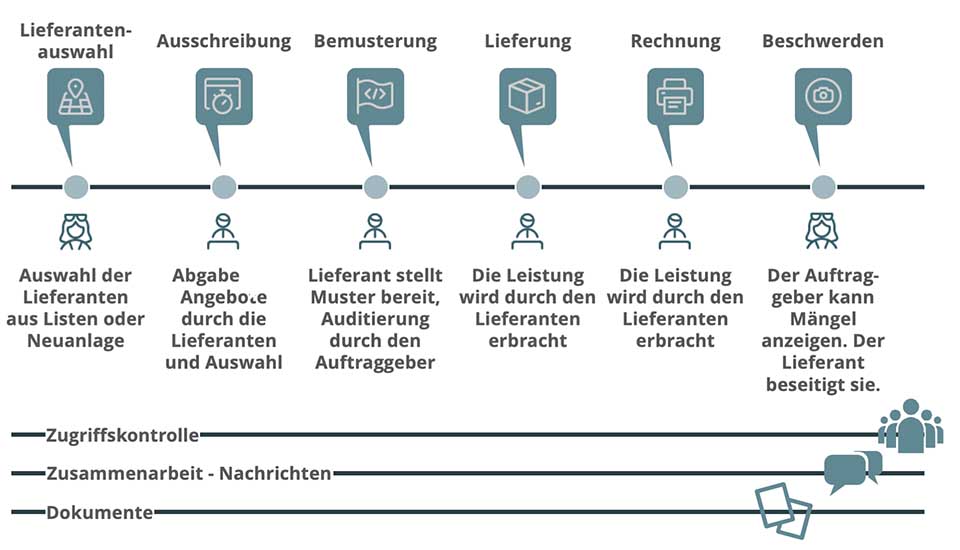

First of all, I would like to introduce the business process that our software supported. The measures described below would look different in a less complex system, so it is always important to keep an eye on the underlying business process. The system under consideration allows companies to create tenders and allows suppliers to participate in these tenders.

In addition to the main business processes (supplier selection, tendering, sampling, delivery, invoicing and complaints), there were the supporting processes: access control, collaboration and document management.

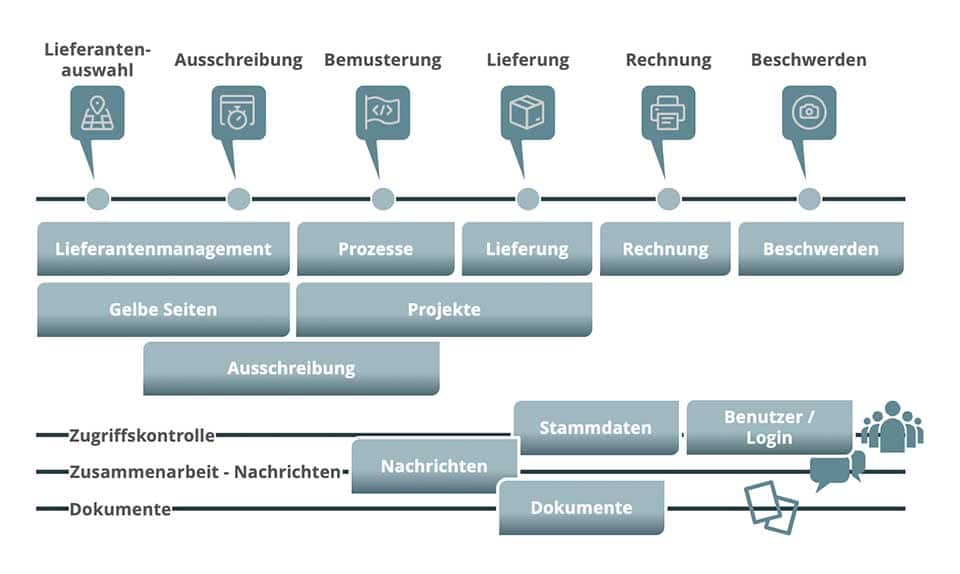

This business process was supported by several applications. The applications had grown historically and covered the business process as a patchwork rather than as a coherent sequence of process steps.

As you can see in the second figure, the individual applications do not map cleanly onto the business processes: some overlap, and some leave gaps. This was not clear to me at the beginning; the picture only emerged over the years. Now I know that service boundaries that did not correspond to the business process were one of the reasons for the problems we had. But I am getting ahead of myself.

System instabilities

The applications mentioned were distributed over two large servers.

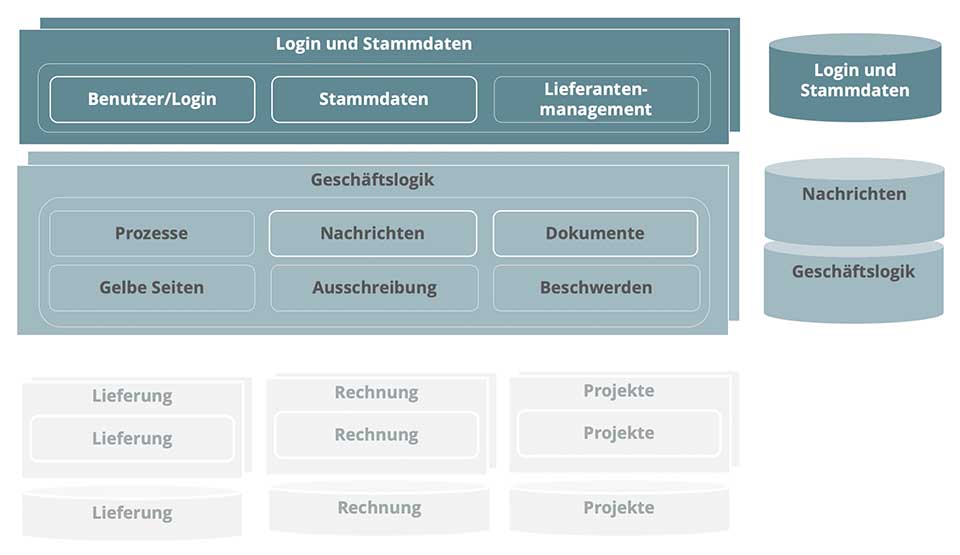

The applications user/login, master data and supplier management were installed as one deployment unit within a Java Virtual Machine (JVM) on the first server pair. The second cluster contained the main business applications: processes, yellow pages, tenders and complaints. The supporting applications documents and messages were installed in the same JVM as well. A very first tiny step had already been taken at this point: the messages application already had its own database.

The applications delivery, invoice and projects were already installed on their own clusters with their own databases. They are not covered in the rest of this report.

The following diagram also shows to what extent the individual applications were interdependent:

Here you can see that the processes supplier selection and tendering in particular extended not only across several applications but also across both clusters. This violation of the architectural principle of “Separation of Concerns” led, among other things, to intolerable instabilities, in other words, to failures. Against a planned availability of 95%, we achieved just under 90%. I believe everyone can vividly imagine the corresponding discussions in operations and development.
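To give a feeling for what these two percentages mean in practice, here is a small back-of-the-envelope calculation. It assumes round-the-clock operation over a 365-day year; the article does not say how availability was measured, so the resulting hours are only an illustration.

```python
HOURS_PER_YEAR = 365 * 24  # assumption: 24x7 operation, non-leap year


def downtime_hours(availability_percent: float) -> float:
    """Hours per year the system is unavailable at the given availability."""
    return HOURS_PER_YEAR * (100 - availability_percent) / 100


planned = downtime_hours(95)  # the planned availability
actual = downtime_hours(90)   # "just under 90%", rounded up to 90 for illustration

print(f"planned downtime budget: {planned:.0f} h/year")
print(f"actual downtime (at least): {actual:.0f} h/year")
```

Under these assumptions, missing the target by five percentage points means roughly twice as many hours of downtime as planned.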

First aid

After these experiences, we knew that we had to change something fundamental. But at that time we knew neither exactly what nor how! For us this meant that we first had to stabilize the patient so that we had enough time to make a real change. Since the main load was on the business logic cluster, we doubled the number of servers there.

However, the applications were not prepared for such horizontal scaling. We had to make minor changes in many places to make even this small stabilisation measure possible (not automatic scaling, of course, but simply a manual increase from 2 to 4 servers). Four was also the limit, because beyond that the internal synchronisation between the nodes would have cost too much performance. It was a first-aid measure that let us survive for at least the following months.

Very first small steps

Of course the world doesn’t stand still just because we had technical problems to solve. New functions and applications were needed. The application “processes” had to be replaced by a new implementation, and we had to support the audit process with software. We knew that we could not simply build these applications into the existing applications. That would have made the chaos complete. Additional access by new applications would have endangered the JVMs in the respective clusters (and a simple restart already took at least half an hour). At this point, I was already convinced that only a consistent microservice approach could help. However, it was difficult to sell such a radical approach to management. But the new applications were at least built as separate deployment units, even if they didn’t (yet) use their own databases.

Further system instabilities

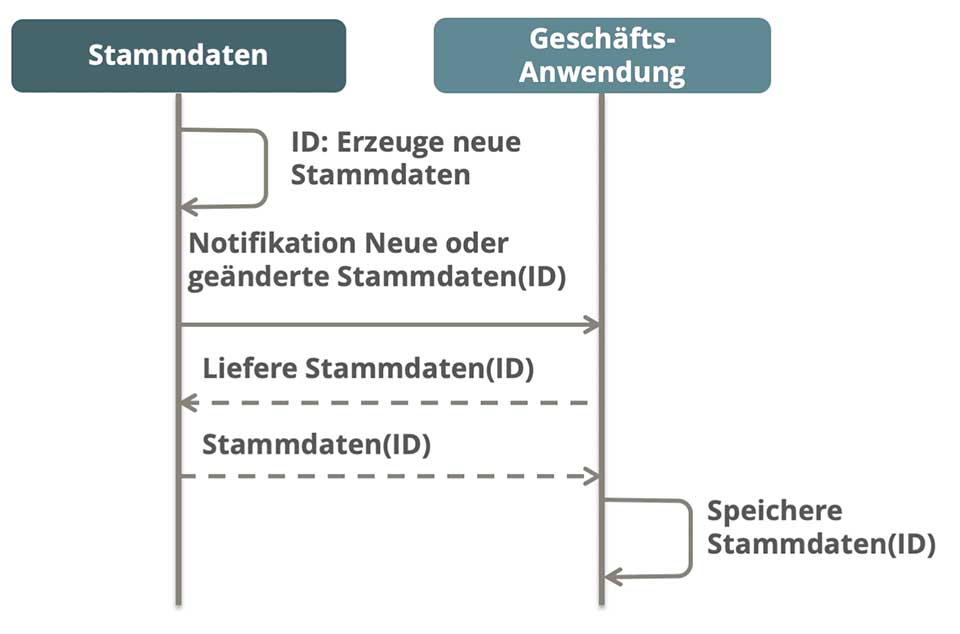

Fortunately, we were successful with our new applications. But this didn’t bode well for the overall system. The new applications needed more and more up-to-date master data. This master data was recorded in the master data application on the “login and master data” cluster and then distributed via Oracle Streams to the applications that used it on the business logic, audit and processes clusters. Within a short time, more master data was being recorded and distributed than before. The streams could no longer keep up, and both databases went down. We were also unable to meet specific requirements for the speed of synchronisation with this approach. Once again we borrowed an approach from the world of microservices.

We implemented an asynchronous master data distributor:

A user or an external service can create master data, e.g. new supplier companies, in master data management. Services that have subscribed to the master data service are informed of the change and receive its unique identifier (ID). Each subscribing service can then, whenever it suits 😊, retrieve the data for that ID from the master data service and store it locally.

By decoupling business logic and master data service, we were able to stabilise the overall system considerably. Performance also improved significantly, as critical changes such as the deletion of a supplier could be prioritised. Overall performance improved further because the dependent services were no longer forced to store the master data in the structure of the master data service. Instead, they could store the data as they needed it, avoiding unnecessary mapping, reformatting, etc. at runtime.
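The idea of the distributor can be sketched in a few lines. This is a minimal in-process illustration, not our actual implementation: the class names, the priority values and the supplier fields are assumptions made for the example, and a real system would use a message broker between the services.

```python
import heapq
import itertools
from dataclasses import dataclass, field


@dataclass
class MasterDataService:
    """Owns the master data and tells subscribers only WHICH ID changed."""
    records: dict = field(default_factory=dict)
    subscribers: list = field(default_factory=list)

    def subscribe(self, subscriber) -> None:
        self.subscribers.append(subscriber)

    def upsert(self, record_id: str, data: dict) -> None:
        self.records[record_id] = data
        self._notify(record_id, priority=1)

    def delete(self, record_id: str) -> None:
        self.records.pop(record_id, None)
        self._notify(record_id, priority=0)  # critical change: handled first

    def get(self, record_id: str):
        return self.records.get(record_id)

    def _notify(self, record_id: str, priority: int) -> None:
        for subscriber in self.subscribers:
            subscriber.notify(record_id, priority)


class SubscribingService:
    """Queues notifications and pulls the data when it suits."""

    def __init__(self, master: MasterDataService):
        self.master = master
        self.local = {}                # local copy, in this service's own shape
        self._inbox = []               # heap of (priority, sequence, record_id)
        self._seq = itertools.count()  # keeps arrival order within a priority
        master.subscribe(self)

    def notify(self, record_id: str, priority: int) -> None:
        heapq.heappush(self._inbox, (priority, next(self._seq), record_id))

    def process_inbox(self) -> None:
        while self._inbox:
            _, _, record_id = heapq.heappop(self._inbox)
            data = self.master.get(record_id)  # always fetch the current state
            if data is None:
                self.local.pop(record_id, None)
            else:
                # Store only what this service needs, in the form it needs.
                self.local[record_id] = data["name"].upper()


master = MasterDataService()
tenders = SubscribingService(master)
master.upsert("S-1", {"name": "Acme"})
master.upsert("S-2", {"name": "Globex"})
master.delete("S-1")
tenders.process_inbox()
print(tenders.local)
```

Because a notification carries only the ID and the subscriber fetches the current state when it processes the notification, a stale "created" notification for an already deleted supplier does no harm.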

Master data service and business logic were now decoupled. But the complexity had now shifted to communication.

Online Deployment

But as life goes, there is always something (else) to do. We had achieved a level of stability that made our customers painfully aware that our planned downtimes were far too long. As long as you’re fighting unplanned downtime, you don’t care much about planned downtime. But the unplanned downtime was gone, so our customers’ expectations forced us to find a solution for the planned downtime. For me, this solution meant online deployment using a blue-green approach with a database.

- Both branches and the database are on the same version. Only one branch (green) is accessible from the outside.

- The database is upgraded to the new version. This must be done online and the old version must be able to work with the new version.

- The isolated branch is upgraded to the new version and tested. These tests include both automated tests and manual exploratory tests.

- Both branches will be released to the outside. This means that both users with the original version 1.0 and users with the new version 1.1 access the system. New users are always routed to the new version. Users who are in the system remain on the old version until they log off.

- After some time, the users on the old version have disappeared from the system. The branch with the old version is now sealed off from the outside (blue).

- The blue branch is upgraded to the new version, and the system is ready for the next deployment.

Such an approach allows users to always access the system. Users do not lose any input because they only access the new system after a new login. In the event of errors in the new version, it is quick and easy to switch back to the previous version.
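The routing rules behind these steps can be sketched as follows. This is a simplified model and not our production routing: the class, its method names and the branch names "a" and "b" are made up for the illustration, and in practice this logic sits in a load balancer or gateway.

```python
class BlueGreenRouter:
    """Pins each user session to one branch during an online deployment."""

    def __init__(self, live_branch: str, version: str):
        self.versions = {live_branch: version}  # branches reachable from outside
        self.target = live_branch               # branch that receives new logins
        self.sessions = {}                      # user -> branch of the session

    def release(self, branch: str, version: str) -> None:
        """Open the upgraded branch; from now on new logins go there."""
        self.versions[branch] = version
        self.target = branch

    def login(self, user: str) -> str:
        self.sessions[user] = self.target
        return self.versions[self.target]

    def request(self, user: str) -> str:
        """Existing sessions stay on their branch until the user logs off."""
        return self.versions[self.sessions[user]]

    def logout(self, user: str) -> None:
        self.sessions.pop(user, None)

    def is_drained(self, branch: str) -> bool:
        return branch not in self.sessions.values()

    def seal_off(self, branch: str) -> None:
        if not self.is_drained(branch):
            raise RuntimeError(f"branch {branch!r} still has active sessions")
        self.versions.pop(branch)

    def roll_back(self, branch: str) -> None:
        """Send new logins back to a branch that is still reachable."""
        if branch not in self.versions:
            raise KeyError(f"branch {branch!r} is not reachable")
        self.target = branch


router = BlueGreenRouter("a", "1.0")
alice_version = router.login("alice")     # logs in before the release
router.release("b", "1.1")
bob_version = router.login("bob")         # new login, new version
alice_still = router.request("alice")     # session stays on the old version
drained_before = router.is_drained("a")   # alice is still logged in
router.logout("alice")
router.seal_off("a")                      # old branch can now be upgraded
```

As long as the old branch has not been sealed off, `roll_back` is all it takes to send new logins back to the previous version.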

Simple deployments are a prerequisite for such an approach. This means that changes must be small, because large changes usually lead to large, complex deployments. You therefore have to deploy more often, so that the individual deployments don’t become too complex.
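Small changes also make it much easier to satisfy the rule that the old version must keep working against the upgraded database. The following sketch shows one such small, purely additive schema change. It uses an in-memory SQLite database only so that the example runs anywhere; the table, the column and the function names are invented for the illustration and do not reflect our schema.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE supplier (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")


def add_supplier_v1_0(conn, name):
    # Version 1.0 names its columns explicitly, so extra columns do not break it.
    conn.execute("INSERT INTO supplier (name) VALUES (?)", (name,))


def list_suppliers_v1_0(conn):
    return [row[0] for row in conn.execute("SELECT name FROM supplier ORDER BY id")]


def add_supplier_v1_1(conn, name, country):
    # Version 1.1 uses the new column.
    conn.execute(
        "INSERT INTO supplier (name, country) VALUES (?, ?)", (name, country)
    )


add_supplier_v1_0(db, "Acme")

# Database upgrade: only add a nullable column, never rename or drop
# anything that version 1.0 still reads or writes.
db.execute("ALTER TABLE supplier ADD COLUMN country TEXT")

# Both versions now work side by side against the same database.
add_supplier_v1_0(db, "Globex")
add_supplier_v1_1(db, "Initech", "DE")

old_view = list_suppliers_v1_0(db)
print(old_view)
```

Destructive changes such as dropping a column would then have to wait for a later deployment, once no branch runs the old version anymore.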

The higher infrastructure costs resulting from doubling the number of server nodes are usually offset by savings on weekend work and on special deployments after unplanned downtimes.

Decoupling the business logic

At last the massive instabilities and the endlessly long production rollouts at the weekend were history. But now we had to act. Higher loads on the system were already foreseeable, and with them the instabilities were guaranteed to return. The only way forward was to develop the microservice ideas further and to decouple the business logic further.

We knew that the highest load was on the tender component. It was therefore obvious to remove the tender component from the deployment unit of the business logic. What stood in the way was the very tight coupling between the tender component and the document component. That’s why we split off the two together. However, this only made sense if we also separated the databases. The resulting architecture shows the following picture:

The component decoupling brought further stabilisation and better performance of the overall system. I also hoped that this architectural decoupling would improve the maintainability of the individual applications. However, this hope was dashed very quickly. A weak point of the original design now became apparent. Common functionality had been combined in central components. These were installed as a separate archive (EAR) in each deployment unit. This made it necessary to test the entire stack even for the smallest changes. This was an extreme effort, which led to further errors. Worse still, these common components had become extremely frayed over time. Any functionality that might have been reusable was placed here. This led to a tight coupling of the components “through the back door”.

Of course we had to break up this tight coupling. This was not a technical requirement – we could have lived on with this convenient “developer-friendly” solution. Above all, it was economic considerations that forced us to make a change. Regression tests of the entire system for each release are simply too expensive. And these central components were definitely not low-maintenance – who would find a functionality that was implemented a year ago and that nobody uses anymore?

In the meantime, our number of servers had risen from 4 to 28, and I too was sometimes overwhelmed by what was going on. This could no longer be done manually. Automating the deployment activities was therefore the obvious step. We built an automated deployment pipeline with further test automation. Deployment automation was another driver for breaking up the coupling through the central components.

The central components were now simple libraries that were pulled into each application during the automated build, under strict version control.

This allowed us to achieve automatic deployment through to production, with production deployment having to be manually approved by the person in charge using the four-eyes principle.
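The manual approval step can be pictured as a simple gate at the end of the pipeline. The sketch below is an assumption about how such a gate might look, not a description of our tooling: the `Release` class and the function name are hypothetical, and the four-eyes principle is read here as "someone other than the author of the release must approve".

```python
from dataclasses import dataclass, field


@dataclass
class Release:
    version: str
    author: str
    approvals: set = field(default_factory=set)

    def approve(self, person: str) -> None:
        self.approvals.add(person)


def may_deploy_to_production(release: Release, tests_passed: bool) -> bool:
    """Allow production deployment only after green tests and an approval
    by a second person (an approval by the author alone does not count)."""
    independent_approvals = release.approvals - {release.author}
    return tests_passed and len(independent_approvals) >= 1


release = Release(version="1.1", author="dev_a")
release.approve("dev_a")                      # self-approval
self_approved = may_deploy_to_production(release, tests_passed=True)

release.approve("lead_b")                     # second pair of eyes
approved = may_deploy_to_production(release, tests_passed=True)
blocked_by_tests = may_deploy_to_production(release, tests_passed=False)
```

Everything before this gate runs automatically; only the final decision stays with people.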

Petit fours

Anyone who has met me knows I have a bit of a sweet tooth. I like petit fours. Maybe the same holds for software architectures: cakes and sweets only taste good when they’re small.

By then we had the constant firefighting and troubleshooting behind us. Finally, finally, we could design the architecture proactively. My considerations at the time were: what needs to scale simply (and as automatically as possible) because it reacts most sensitively to load? This was the login component. The ultimate goal was to scale the login component horizontally and automatically, depending on the load.

So we split off the login component. User and master data administration remained in the master data cluster. The login component also got its own database. It was thus the first component to deserve the name “microservice”. My first, hard-earned “petit four”.
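What load-dependent horizontal scaling means can be illustrated with a simple sizing rule. The function, its parameters and the numbers are assumptions chosen for the example; they do not describe the scaling logic of a particular platform or of our system.

```python
import math


def desired_nodes(logins_per_second: float, capacity_per_node: float,
                  min_nodes: int = 2, max_nodes: int = 10) -> int:
    """Number of login nodes needed for the current load, within fixed bounds."""
    if capacity_per_node <= 0:
        raise ValueError("capacity_per_node must be positive")
    needed = math.ceil(logins_per_second / capacity_per_node)
    return max(min_nodes, min(max_nodes, needed))


quiet = desired_nodes(50, capacity_per_node=100)    # lower bound applies
busy = desired_nodes(450, capacity_per_node=100)    # scales with the load
peak = desired_nodes(5000, capacity_per_node=100)   # upper bound applies
print(quiet, busy, peak)
```

A rule like this only works if a node can be added or removed without coordinating with the others, which is why the login component needed its own deployment unit and its own database first.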

Everything much too expensive

Looking at this development, we went from an initial 4 servers and 3 databases to 36 servers and 6 databases. Anyone who sees this will throw up their hands and declare me totally crazy. To be honest, my boss at that time was close to doing so 😉. But we had proven that we were more stable. We had proven that we could react faster to change. And we had proven that we could save testing effort by testing the separated services in a more targeted way.

Of course, more servers cost more money. However, the additional servers could be much smaller. The existing servers could also be reduced in size, but not by so much that this absorbed the costs of the additional servers. The real savings came from the cost drivers “weekend work” and “troubleshooting”, because these dropped to zero. This made the change pay off very quickly. In the end, the infrastructure costs could be reduced to 50% of their initial level.

Now what?

We had really big applications that really deserved the name monolith. Even minor changes were expensive and risky. We had achieved a lot over the years. But we saw that this path would only take us so far. A reimplementation of the essential applications “tender” and “yellow pages” was necessary.

This reimplementation could no longer be carried out by traditional means, as we had painfully felt the effects of the dead ends. Rather, the path led to the cloud with a genuine microservice approach. There you can concentrate on the functionality and leave the infrastructure to the experts. However, a good business analysis and, based on it, meaningful service boundaries are a basic prerequisite for successfully developing business applications in the cloud.

Conclusion

Do you always have to smash a monolith or take it apart slowly, as in the present example? Definitely not. As the report shows, we had good reasons for taking the monolith apart. Stability, performance, automation, testability and maintainability are good reasons to use microservices. The improvement of these non-functional requirements must be weighed against the growing complexity of communication.

Microservices are a recommended way to meet non-functional requirements. In order to cope with the growing complexity, a high degree of automation is required. In the end, one has to balance stability, automation efforts and the control of increased operational complexity.

Notes:

Dr. Annegret Junker has published more articles in our t2informatik Blog:

Dr. Annegret Junker

Dr Annegret Junker works as Chief Software Architect at codecentric AG. She has been working in the software industry for over 30 years in various roles and different domains such as automotive, insurance and financial services. She is particularly interested in DDD, microservices and everything related to them.