Completeness of specifications – A new approach

Table of Contents

Natural language as a specification element

Modelling as a specification element

Is the truth in the source code?

The essential elements of systematic development in safety-related projects

The dilemma of specifications

Structural coverage measurement includes a unique completeness criterion

Non-intrusive measurement of structural coverage

Non-intrusive technology for proving data and control flow

Conclusion

Specifying systems is still one of the major challenges and sources of errors in development. Even model-based development cannot really solve the specification problem. A model defines in detail how a system works (architecture), but not what the system does (function).

The completeness of specifications is one of the central problems. This article is dedicated to this aspect and discusses approaches to systematically completing specifications in the future, especially in safety-relevant projects.

Natural language as a specification element

Natural language is an essential element in the creation of specifications. Its great advantage is that everyone understands it: it is the essential means of communication between us humans. However, we do not primarily use language to communicate clearly, correctly and completely, and there are many good reasons why this is appropriate in everyday life.

For the specification of a system to be developed, on the other hand, unambiguity, correctness and completeness are very important. Over the last decades, requirements engineering has developed techniques for describing systems reasonably unambiguously, correctly and completely in natural language. However, the quality achieved in this way is not sufficient, especially for safety-relevant systems.

Modelling as a specification element

In recent years, model-driven development has advanced significantly in software development. At first glance, models are also much better suited to specifying systems unambiguously, correctly and completely, because models are based on mathematical rules.

However, experience from many model-driven development projects also shows that models cannot replace natural language requirements specifications. Rather, models and natural language requirements specifications complement each other. A model essentially defines how a system works, whereas natural language requirements specifications have their strength in describing the functionality of a system (what it does).

Is the truth in the source code?

Many developers and project managers who have had bad experiences with requirements specifications tend to say: “The truth is in the source code!” This sentence implies that one can ultimately do without the effort of developing requirements specifications and their documentation. At first glance, the agile development approaches also aim in this direction.

But if you take a closer look at agile methods, you quickly realise that requirements specifications are in fact a central element. Every sprint planning session is based on clearly identifying the functions to be implemented. The agile world does not allow you to just code away either.

What really characterises agile projects is their very short, iterative development loops. These provide quick feedback, so misunderstood requirements specifications are corrected early.

With the complexity of today’s systems, uncoordinated programming would foreseeably lead to chaos. The failure of the project would only be a matter of time.

The enormous functional scope that software gives even simple systems demands a systematic approach.

The essential elements of systematic development in safety-related projects

Almost 40 years ago (1983), RTCA DO-178, the standard for the development of safe software in aviation, was released. It was the first standard to define the development process and its results with the aim of systematically reducing errors in software to an acceptable level.

In 1998, the first version of IEC 61508 was published. The merit of this standard was that it defined functional safety requirements for all industrial sectors that did not have an industry-specific standard. In 2010, the second edition of IEC 61508 was published, and today many industries have their own functional safety standards.

Even though each standard has its own focus, some process steps, data and documents have emerged over the last decade that are now uncontroversial for high-quality development of embedded systems. These are:

- Appropriate project planning, with an appropriate organisational structure,

- design measures to reduce functional risk,

- procedures and measures to minimise random hardware failures, and

- procedures and measures to minimise systematic software and hardware errors.

With regard to software development, the following procedures and measures are now indisputably necessary:

- Establishing a development and verification strategy early on,

- ensuring good and regular communication between all project members,

- functional specification of the software,

- architecture and design,

- static and dynamic testing at different levels,

- traceability between all essential artefacts,

- configuration and change management, and

- proactive quality assurance.

The dilemma of specifications

On the one hand, the realisation is gaining ground that requirements specification, architecture and tests are indispensable components of systematic software development. Only by applying a development process that includes these elements can software errors be reduced to a minimum.

On the other hand, we know that neither requirements engineering nor test engineering is perfect. Natural language requirements specifications are never 100% clear, correct and complete. The same applies to tests: in practice, the possible combinations of input signals for industrially relevant systems tend towards infinity, and 100% complete tests can be derived from natural language requirements specifications only in trivial cases.

Today, only the architecture of software can be created with appropriate modelling tools in such a way that source code can be generated automatically from it. Such an architecture therefore has a verifiable criterion for completeness and unambiguity. Whether it is correct in the sense of the user of the software, however, cannot be determined unambiguously. To make that statement, one would need unambiguous, correct and complete requirements specifications that are completely linked to the architecture.

Anyone who has ever established traceability between requirements and architecture knows how difficult this is. The problems already start with the tooling. Ideally, requirements specifications are stored in databases and architectures in corresponding graphical tools. I do not know of any tooling in which the data exchange needed to create traceability between requirements specifications and architecture works smoothly.
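Once the data exchange problem is solved, the check that traceability is meant to enable is simple. The following is a minimal sketch, assuming requirements and architecture elements have already been exported from their tools into plain sets; all IDs and component names are hypothetical.

```python
# Hypothetical exports: requirement IDs from the requirements database,
# element names from the architecture tool.
requirements = {"REQ-1", "REQ-2", "REQ-3"}
architecture = {"SpeedController", "BrakeMonitor", "Logger"}

# Hypothetical trace links: requirement ID -> architecture elements realising it.
trace_links = {
    "REQ-1": {"SpeedController"},
    "REQ-2": {"SpeedController", "BrakeMonitor"},
}

def traceability_gaps(reqs, arch, links):
    """Return requirements without any link and architecture elements no requirement traces to."""
    unlinked_reqs = {r for r in reqs if not links.get(r)}
    traced_elements = set().union(*links.values())
    orphan_elements = arch - traced_elements
    return unlinked_reqs, orphan_elements

unlinked, orphans = traceability_gaps(requirements, architecture, trace_links)
print(sorted(unlinked))  # ['REQ-3']
print(sorted(orphans))   # ['Logger']
```

An unlinked requirement hints at missing architecture; an orphan element hints at a missing requirement.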

Structural coverage measurement includes a unique completeness criterion

In aviation, it has been recognised for decades that the greatest weakness of natural language requirements and test cases, as explained above, is the lack of a completeness criterion. This realisation led to the introduction of structural coverage measurement. The idea is to create requirements-based tests and measure the test coverage in the background. If all requirements are fully tested and the measurement still does not show 100% test coverage (which is almost always the case), the untested code must be analysed.
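The principle of measuring coverage in the background of requirements-based tests can be sketched as follows. This is an illustration under simplifying assumptions: coverage points stand for statements or branches, and the per-test hit sets are hypothetical results that a coverage tool would deliver.

```python
# Hypothetical coverage points (e.g. statements or branches) of the software under test.
all_points = {"init", "read_sensor", "filter", "clamp_high", "clamp_low", "error_handler"}

# Hypothetical coverage points hit while each requirements-based test ran.
hits_per_test = {
    "TC-REQ-1": {"init", "read_sensor", "filter"},
    "TC-REQ-2": {"init", "read_sensor", "clamp_high"},
}

def coverage_report(points, hits):
    """Merge the hits of all tests; return coverage in percent and the uncovered points."""
    covered = set().union(*hits.values()) & points
    percent = 100.0 * len(covered) / len(points)
    return percent, points - covered

percent, uncovered = coverage_report(all_points, hits_per_test)
print(f"{percent:.1f} % covered")  # 66.7 % covered
print(sorted(uncovered))           # ['clamp_low', 'error_handler']
```

The uncovered points are the input to the analysis step: they show where requirements or tests may be missing.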

In principle, there can be four reasons for missing structural coverage:

- Requirements specifications are missing or incomplete.

- Test cases are missing.

- The programme code cannot be reached by a test, but is needed (e.g. defensive programming style).

- The programme code is not needed.
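The analysis of each uncovered code section ends in one of the four reasons listed above, and each reason calls for a different follow-up. The sketch below records that decision; the follow-up actions are one plausible assignment (an assumption, not a rule quoted from a standard), and the analysed code points are hypothetical. The reason itself is determined by a person reviewing the code, not by the tool.

```python
from enum import Enum

class GapReason(Enum):
    """The four possible reasons for missing structural coverage."""
    MISSING_REQUIREMENT = "requirement missing or incomplete"
    MISSING_TEST = "test case missing"
    UNREACHABLE_BUT_NEEDED = "code needed but not reachable by a test"
    NOT_NEEDED = "code not needed"

# Assumed follow-up per reason, for illustration only.
FOLLOW_UP = {
    GapReason.MISSING_REQUIREMENT: "extend the requirements specification, then add a test",
    GapReason.MISSING_TEST: "add a requirements-based test case",
    GapReason.UNREACHABLE_BUT_NEEDED: "document a justification for the uncovered code",
    GapReason.NOT_NEEDED: "remove the code",
}

# Hypothetical result of a manual review of uncovered code points.
analysis = {
    "clamp_low": GapReason.MISSING_REQUIREMENT,
    "error_handler": GapReason.UNREACHABLE_BUT_NEEDED,
}

for point, reason in sorted(analysis.items()):
    print(f"{point}: {reason.value} -> {FOLLOW_UP[reason]}")
```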

This is precisely why test coverage has been measured in aviation for decades. So far, however, the method could only be applied at module test level, because conventional coverage measurement always changes the runtime behaviour of the application (software instrumentation). For test levels at which runtime behaviour is relevant (integration and system tests), conventional test coverage measurement therefore cannot be applied.
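Why instrumentation is intrusive can be illustrated with a small stand-in. Coverage tools for embedded C typically insert probe code at compile time; this Python sketch imitates that by hand. The function `limit_speed` and the probe names are hypothetical. The functional result stays the same, but every probe adds instructions and memory writes, so timing and memory footprint change.

```python
import time

# Hypothetical coverage buffer written by the inserted probes.
coverage_hits = set()

def probe(point_id):
    """Instrumentation probe: records that a coverage point was executed."""
    coverage_hits.add(point_id)

def limit_speed(speed, limit):
    """Original, uninstrumented function."""
    if speed > limit:
        return limit
    return speed

def limit_speed_instrumented(speed, limit):
    """Same logic with probes inserted, as a coverage tool would do."""
    probe("limit_speed:entry")
    if speed > limit:
        probe("limit_speed:clamp")
        return limit
    probe("limit_speed:pass")
    return speed

def elapsed(func, runs=100_000):
    """Wall-clock time for calling func repeatedly with varying speeds."""
    start = time.perf_counter()
    for i in range(runs):
        func(i % 200, 120)
    return time.perf_counter() - start

print(f"original:     {elapsed(limit_speed):.4f} s")
print(f"instrumented: {elapsed(limit_speed_instrumented):.4f} s")
print(sorted(coverage_hits))
```

The measured times vary between machines; the point is only that the instrumented variant does additional work on every call.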

Because functional relationships are inevitably broken down when the requirements specification is refined to the design level, test coverage measured at module test level cannot reliably show whether the functional requirements specification is complete. There has therefore long been a desire in aviation to use test coverage measurement in integration testing or even system testing. There, the original motivation for structural coverage measurement could take full effect.

Non-intrusive measurement of structural coverage

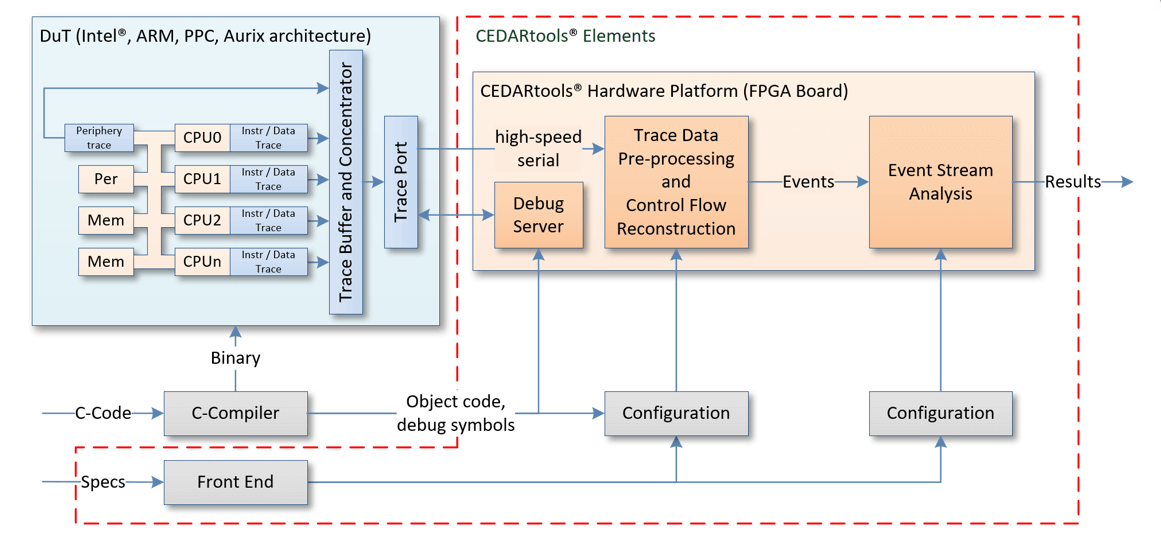

Almost all modern processors have an “embedded trace” interface. Through this interface, programme execution in the processor can be observed without influencing the processor’s behaviour. This generates huge amounts of data (many Gbit/s).

Integration and system tests often extend over long periods of time, and at such data rates storing the complete trace stream quickly becomes impractical. It is therefore very advantageous to be able to process the trace data stream in real time.
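A quick calculation shows the scale. Both input values below are assumptions chosen for illustration; the actual trace bandwidth depends on the processor and its trace configuration.

```python
# Assumed values for illustration only.
TRACE_RATE_GBIT_PER_S = 4.0  # assumed trace data rate
TEST_DURATION_HOURS = 8.0    # assumed length of one system-test session

def trace_volume_terabytes(rate_gbit_per_s, hours):
    """Raw trace volume in terabytes (10**12 bytes) if the stream were stored instead of processed."""
    bits = rate_gbit_per_s * 1e9 * hours * 3600
    return bits / 8 / 1e12

print(f"{trace_volume_terabytes(TRACE_RATE_GBIT_PER_S, TEST_DURATION_HOURS):.1f} TB")  # 14.4 TB
```

Under these assumptions, a single working day of testing would produce roughly 14 TB of raw trace data.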

With CEDARtools®, Accemic Technologies offers a solution for precisely this real-time processing of trace data. This means that the trace interface can be used to measure the structural test coverage for an unlimited period of time.

Since the runtime behaviour of the application on the processor is not influenced in any way, this new technology now also enables the measurement of test coverage for integration tests and system tests.
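How coverage can be derived from trace data is sketched below in simplified form. This is not the CEDARtools interface or algorithm; it assumes a trace decoder has already turned the raw stream into address ranges executed between taken branches, and all addresses and block names are hypothetical. Because a basic block has no internal branches, an executed range that contains the block's start address means the whole block ran.

```python
from bisect import bisect_left

# Hypothetical basic blocks of the programme: (start_address, end_address, name).
basic_blocks = [
    (0x1000, 0x100F, "main:entry"),
    (0x1010, 0x101F, "main:loop"),
    (0x1020, 0x102B, "main:error"),
    (0x1030, 0x103F, "filter:body"),
]

# Hypothetical decoder output: executed address ranges (inclusive).
executed_ranges = [(0x1000, 0x101F), (0x1030, 0x103F), (0x1010, 0x101F)]

def covered_blocks(blocks, ranges):
    """Mark a basic block as covered if an executed range contains its start address."""
    starts = sorted(b[0] for b in blocks)
    names = {b[0]: b[2] for b in blocks}
    covered = set()
    for lo, hi in ranges:  # works on a stream of ranges, one at a time
        i = bisect_left(starts, lo)  # first block starting at or after lo
        while i < len(starts) and starts[i] <= hi:
            covered.add(names[starts[i]])
            i += 1
    return covered

hit = covered_blocks(basic_blocks, executed_ranges)
print(sorted(hit))  # ['filter:body', 'main:entry', 'main:loop']
print(f"{100 * len(hit) / len(basic_blocks):.0f} % block coverage")  # 75 % block coverage
```

Since the ranges are consumed one by one, the same loop could run on a live stream rather than a stored list.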

Initial results from the application of this technology also confirm the theoretical assumptions that structural coverage of 80% and more is achieved in integration testing when all requirements are fully tested.

This confirms that measuring structural test coverage during the execution of the requirements-based, functional black-box tests is an effective means of detecting gaps in the requirements specification and the associated tests.

Non-intrusive technology for proving data and control flow

The non-intrusive observability of a processor opens up further testing possibilities. It also makes it possible to check the values of parameters passed across internal software interfaces and thus, for the first time, to verify the data and control flow analysis required by all relevant functional safety standards by means of dynamic tests.
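A dynamic data flow check on an internal interface could look like the sketch below. It assumes the observed reads and writes of an interface variable have already been extracted from the trace; the event format, the specification table and all component names are hypothetical. The check flags unexpected writers or readers, out-of-range values, and reads of data not produced by the specified producer.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Access:
    """One observed access to an internal interface variable (hypothetical trace event)."""
    time_us: int
    component: str
    kind: str  # "write" or "read"
    variable: str
    value: float

# Hypothetical specified data flow: variable -> (producer, consumer, allowed value range).
spec = {"wheel_speed": ("SensorDriver", "SpeedController", (0.0, 300.0))}

observed = [
    Access(10, "SensorDriver", "write", "wheel_speed", 87.5),
    Access(12, "SpeedController", "read", "wheel_speed", 87.5),
    Access(20, "Logger", "write", "wheel_speed", 0.0),
    Access(22, "SpeedController", "read", "wheel_speed", 0.0),
]

def check_data_flow(events, specification):
    """Return findings where the observed flow deviates from the specified flow."""
    findings = []
    last_writer = {}
    for e in sorted(events, key=lambda a: a.time_us):
        if e.variable not in specification:
            continue
        producer, consumer, (lo, hi) = specification[e.variable]
        if e.kind == "write":
            if e.component != producer:
                findings.append(f"t={e.time_us}: unexpected writer {e.component}")
            if not lo <= e.value <= hi:
                findings.append(f"t={e.time_us}: value {e.value} out of range")
            last_writer[e.variable] = e.component
        elif e.kind == "read":
            if e.component != consumer:
                findings.append(f"t={e.time_us}: unexpected reader {e.component}")
            if last_writer.get(e.variable) != producer:
                findings.append(f"t={e.time_us}: {e.component} read data not written by {producer}")
    return findings

for finding in check_data_flow(observed, spec):
    print(finding)
```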

Since, unlike testing with a debugger, such tests can be fully automated, it becomes possible to systematically prove data and control flows in integration and system tests. It is precisely in these tests that the fulfilment of functional requirements is also proven. Because important data and control flows are always reflected in the system’s functions, this technology also offers a way to check the completeness of the requirements specification.

Data and control flows are essentially specified in the system and software architecture, but they are also reflected in functional, textual requirements specifications. Because the same flows appear in both artefacts, they can serve as a common link, which should make traceability between functional requirements specifications and the architecture much easier in the future.
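Comparing observed control flow with the architecture works in both directions, as the following sketch shows. It assumes the permitted calls between components have been taken from the architecture and the actual calls reconstructed from the trace; all component names are hypothetical. Calls outside the architecture indicate a deviation, while specified calls that never occur during the requirements-based tests point to missing requirements or tests.

```python
# Hypothetical architecture: permitted calls between components (caller, callee).
specified_calls = {
    ("Scheduler", "SensorDriver"),
    ("Scheduler", "SpeedController"),
    ("SpeedController", "ActuatorDriver"),
    ("SpeedController", "DiagnosticManager"),
}

# Hypothetical call sequence reconstructed from the trace during a system test.
observed_trace = [
    ("Scheduler", "SensorDriver"),
    ("Scheduler", "SpeedController"),
    ("SpeedController", "ActuatorDriver"),
    ("SensorDriver", "ActuatorDriver"),
]

def compare_control_flow(specified, observed):
    """Return (calls not allowed by the architecture, specified calls never exercised)."""
    observed_set = set(observed)
    return observed_set - specified, specified - observed_set

violations, unexercised = compare_control_flow(specified_calls, observed_trace)
print("not in architecture:", sorted(violations))
print("never exercised:", sorted(unexercised))
```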

Conclusion

Textual requirements specifications and the graphical architecture are the two complementary means of successfully specifying complex systems. A major challenge with textual requirements specifications is checking their completeness. The measurement of structural test coverage, by contrast, provides a clearly measurable completeness criterion.

With the new technology of non-intrusive measurement of structural test coverage, the completeness of requirements specifications can be checked by dynamic system and integration tests. Classical tools for measuring structural test coverage can only be used with module tests, and module tests cannot be used to verify the fulfilment of functional requirements.

Since the non-intrusive technology also enables the dynamic verification of data and control flows, it is now possible to verify the completeness of requirements related to the data and control flows.

Non-intrusive technology now enables the practical implementation of methods that were recognised as necessary many years ago. This is especially true for the development of safety-critical systems.

The data made available by continuous, non-intrusive observation can also be used in all other areas of testing. The quality of tests will benefit enormously from this.

Notes:

HEICON and Accemic Technologies will be happy to answer any further questions you may have on the subject. Simply send an email to info@heicon-ulm.de.

Martin Heininger

Dipl.-Ing. (FH) Martin Heininger is founder and managing director of HEICON – Global Engineering GmbH, a consulting company for efficient and effective system and software development processes and methods. He has more than 20 years of experience in functional safety for embedded systems in the aviation, automotive, automation and railway industries. Improving the practical applicability of requirements and test engineering methods is his passion.