Artificial intelligence (AI) for everyone?

We want to simplify our lives with the help of technology. IT has been supporting our businesses and our everyday lives for years and is becoming increasingly pervasive. With its help, we can even do things we know little or nothing about, such as (in my case) filing a tax return. IT seemingly expands our range of skills and appears to be a reliable partner.

With artificial intelligence (AI), we are taking the next, seemingly logical step: AI expands our scope for action and decision-making. It can process far more information, far faster, than humans; it has access to knowledge available worldwide and can even learn. It is not surprising, then, that AI seems like something we have long wished for. With its capabilities, it can do things for us that overwhelm us or are simply no fun: school essays are one very trivial but often cited example, marketing texts another, and both are the subject of controversial debate.

In the professional environment in particular, AI promises a remedy for the essential problems of our time: the shortage of skilled labour, bureaucratically overloaded processes and even decision-making itself, which grows harder as complexity increases. But aren’t we actually “just” creating a solution for problems we have created ourselves? And, to paraphrase Einstein, doing so with a solution based on much the same way of thinking that created our problems in the first place? The clear advantage, after all, would be that we could (for the time being) carry on as usual, a thoroughly appealing idea for our brain, which is designed to save energy.

Artificial intelligence – not so new and yet still new

The use of AI and the discussion surrounding it are, of course, nothing new. AI research and application already existed as a separate discipline in the Department of Computer Science at TU Berlin when I was a student in the 1980s. And even when I was a child, experts were voicing criticism: Joseph Weizenbaum, for example, warned of the consequences of AI for humans after his experiences with ELIZA, a program that imitated a psychotherapist in conversation. As a result of these and other experiences, the subject area “Computers and Society” was introduced at TU Berlin in the 1980s. AI systems have of course not disappeared since then; they have been developed further and now, for example, support medical professionals with diagnoses. A thoroughly valuable achievement.

What is new today is that AI is obviously being marketed and accepted as a mass-market product. And companies are pursuing the idea of using AI across the board wherever human expertise is lacking, either because skilled labour is in short supply or because humans simply do not have the information memory and processing capabilities of AI. Our minds assume that more knowledge means more certainty, a long-held belief of the industrial world[1].

A living environment

It is a trivial fact that our environment is alive and changes every second (actually even faster). This creates new impulses and new opportunities[2]. In our smart homes and on our smartphones, we no longer even notice much of this, because the technology around us creates a completely new atmosphere in which the so-called weak signals[3] that alert us to such developments are increasingly difficult to perceive. We no longer train the skills needed for such perception, and the constant sensory overload makes it virtually impossible to pick up these signals in everyday life.

It seems no coincidence to me that AI is moving into the focus of the general public at a time that, in our eyes, is marked by unexpected events. The coronavirus pandemic, wars and climate change are frightening because we obviously no longer have everything under control. Thanks to its greater knowledge, its ability to predict probable scenarios and its scope for shaping the future, AI is becoming a source of hope that we can still control our increasingly fast-paced and complex world. More data, more speed and cognitive intelligence are supposed to help us finally “get a grip” on uncertainty. And, as so often when the pendulum swings in one direction, a strong countermovement is visibly emerging. In the case of AI, it is already very evident in the form of fake news and other deceptions, in other words anything but certainty.

This is unpleasant, but can probably still be dealt with using additional technology, and this is exactly what is being discussed and implemented. More problematic and more fraught with risk is the hope, so closely associated with AI, of controlling and mastering the increasingly complex and fast-paced world we live in. Amid all this hope, it is often overlooked that technology itself is a source of uncertainty[4]. With its increasing complexity and ability to learn, AI will take this to a new level.

AI from the perspective of uncertainty research

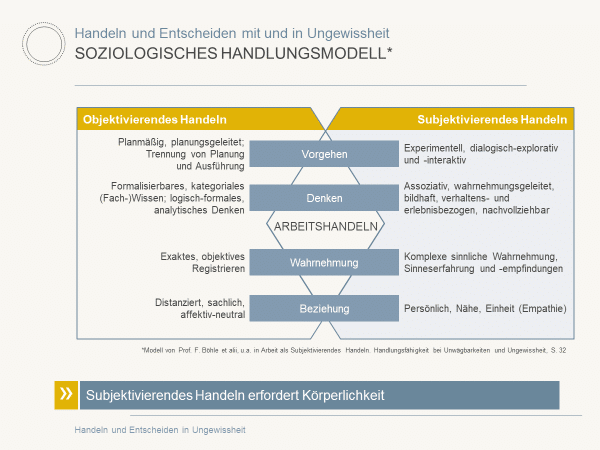

In the hope of using AI to at least better control uncertainty and to have more certainty and make the “right” decisions through increased knowledge and decision-making speed, it is worth taking a look at science and the competences published there for dealing with uncertainty. At this point, I would like to briefly illustrate these competences graphically using the action model by Prof. Fritz Böhle et alii, so that you can understand the following considerations more easily.

AI is strong on the left-hand side of the model (objectifying action), i.e. in rational cognition. Measurability, logical analyses, specialist knowledge and affective neutrality are a great strength of technical systems, and ultimately they are even created for this purpose.

Sociological action model by Prof. F. Boehle et alii

The situation is much worse on the right-hand side (subjectivising action), which is essential in situations of uncertainty: instinct and intuition form the part of our intelligence responsible for subjectivising action, not a cognitive intelligence but an embodied intelligence that is definitely also emotionally charged. But what does this right-hand side mean for the intelligence of AI?

AI and subjectivising action

AI can of course support our perception, but more data and information do not necessarily improve intuition; in fact, the opposite is the case[5]. And how much harder will it be to let go when change requires it[6] if AI also confronts us with all the knowledge that we have long since suppressed, often for good reasons?

And can you imagine an AI whose hair “stands on end” when it looks at a company’s annual planning, or that “shivers” at the sight of the new logo? Such embodied reactions are typical examples of subjectivising perception.

Of course, AI can now also communicate eloquently with us, using speech and even facial expressions. But is this really closeness and empathy (the subjectivising relationship level), or is it rather a rational, tactical masterpiece, a cognitive empathy of the kind also described in narcissism?

And yes, generative AI can support our creativity by suggesting procedures, recognising patterns and generating images (the subjectivising levels of thought and procedure). But living creativity is more than that: it requires our embodied senses, our emotionality and our intuition.

With these aspects in mind, the use of the term “intelligence” in AI should at least be questioned.

Is AI at least suitable for the masses?

To assess the quality of the results AI delivers, you need experts with experience both in the specialist topic and with AI. Thanks to their experience and in-depth knowledge, experts have the instinct mentioned above for which AI suggestions are actually helpful. And experts recognise fakes precisely because they sense that “something is wrong” and investigate. Laypeople, however, cannot assess the quality and tend to trust the AI because “it knows so much and communicates so well with me”. I do the same with the tax return I mentioned at the beginning, because I have no idea and therefore no alternative.

There are good reasons why in Star Trek, which I like to cite as an example, the AI systems for engine control are consulted in critical cases by the experts (Scotty, Geordi La Forge) and not directly by Captain Kirk or Captain Picard. Thanks to their fundamental knowledge, the experts have a feel for what might help and, above all, what might not. We are already noticing that AI delivers “better” results when we ask the “right” questions or follow up; specialist expertise is more than just helpful.

If we apply this to modern, mass-produced AI systems, we lack this intuition unless we are experts in the subject area ourselves. And this does not apply only to uncertainty; evaluating options for action always requires expertise. Without it, there is a risk of blindly following the AI’s suggestions, and that is the exact opposite of gaining control: instead, we gradually hand control over to the AI.

The consequences are vividly illustrated by another science fiction series, Stargate. In season 7, episode 5 (The Power of Memory), people inhabit an idyllic living environment created by a computer beneath a dome that seemingly protects them from a hostile environment. But their space becomes ever more constricted, the computer conceals the consequences of this increasing confinement, and the inhabitants no longer even notice the contradictions and threatening inconsistencies. The episode is a wonderful metaphor for what happens when, lacking competence and fearing the unknown (here, the seemingly hostile environment), we hand control over to technology. Our room for manoeuvre becomes ever narrower and our dissociation ever more perfect, for fear of facing reality.

The first season of Star Trek offers similar metaphors, in which the apparent happiness of security comes at a high price: increasing restrictions on human creativity and development.

AI and ethics

Another debate concerns the ethics that AI needs. AI systems capable of acting independently may have to weigh up the consequences of their actions. Once again, the science fiction I like to use as an illustration offers inspiration, namely the cult series Raumpatrouille Orion. In the episode “Guardians of the Law”, small scraper robots take control of the humans looking after them because, in their view, the humans are violating the ethics the robots have been taught and are hurting each other.

A real-life example is self-driving systems, which in extreme cases have to decide between protecting the driver and protecting other road users, or weigh up whether to run over a cat or a hedgehog. Humans have a kind of inner compass for this, which is socially learnt but in some respects also innate. In addition to the rational, reason-based aspects, bodily reactions and emotions play an important role.

It takes more than just rules. Personally, I don’t want to hand my life over to a system that rationally decides between my life and that of another road user. Delegating such tasks to technology neither provides security nor is it responsible behaviour. And this question will not arise only in ten years’ time; it arises today with automated driving in road traffic.

The AI Data

The story of the AI Data in Star Trek: The Next Generation is a lesson in the hurdles an AI has to overcome to develop human intelligence. Starting out with a purely cognitive intelligence and equipped with a body, Data has to address ethical questions, develop emotions and empathy and learn artistic creativity. The most challenging task is the encounter and confrontation with his shadow, which appears in the form of his “evil” brother Lore and plunges Data into an inner conflict of his own.

Our current AI developments are still a long way from even Data’s beginnings, but they can already influence our human development through their knowledge, their speed advantage and their communication (and thus apparent relationship) with us, quite possibly in a direction that we neither expect nor want.

A summarised look at risks

I am a project manager. In the following, I will therefore take AI in project management as an example to summarise the risks I have already mentioned in a very concrete and structured way. I am following the old project manager rule: if we want to utilise the opportunities, we have to deal with the risks. The following aspects can of course also be applied to other professions beyond project management.

Quality

AI can relieve project people or perform tasks that would otherwise have “fallen victim to time” (a popular IT example: testing). At the same time, we see in many professional environments that the time gained has to be turned into higher throughput instead of deeper immersion in the topic or more mindfulness at work. The hoped-for reduction in stress does not materialise, and neither does the hoped-for improvement in the quality of results.

Project managers are experts in the implementation of projects and thus in acting and making decisions in projects. If AI takes over entire project management tasks, such as project planning, there is a risk that significant parts of the expertise for the specific project and the methodology used will be lost. Yet this expertise is the basis for competent, professional project management and the associated quality of results. The expertise required to manage AI is also jeopardised, as the experts effectively make themselves redundant.

The interfaces of the current AI generation already allow people without specialist expertise to work with AI. This can easily create the impression that project management can be replaced by AI. In that case, the necessary evaluation of the AI’s results no longer takes place and the AI has free rein. Ultimately, the AI then controls the project instead of the (project) person controlling the AI.

Uncertainty competence

We need subjectivising competencies to manage everything from projects to major transformations, with all their unexpected turns. Project people must have the necessary skills for this because, as outlined above, AI is poorly positioned in this area and will remain so for the foreseeable future.

- Project people gain experience by doing. Experiential knowledge is an important uncertainty competence, and one that experienced project managers have often developed unconsciously. I see a risk in using AI that less experience will be gained and therefore less (unconscious) uncertainty expertise will be built up. This jeopardises project success and transformations.

- In the project management disciplines, the skills for dealing with uncertainty are still inadequately trained or not promoted at all, and at the same time we are introducing a new source of uncertainty with AI (according to ISF Munich, technology is a major source of uncertainty, which back in 2016 already caused about as much uncertainty as humans did[7]). This also jeopardises project success and transformations.

The idea of increasing knowledge in order to master or even eliminate uncertainty in projects is also a recurring theme in project management. AI is a wonderful opportunity to continue along this path, but unfortunately it is primarily more of the same rational approach. And this has led us as a society into a situation that we have less and less “under control”, while we continue to “pretend” we do.

Projectification of society[8]

In the foreseeable future, we will have to settle the question of AI ethics, especially in project management, which is helping to shape the future of our society. And AI will implement whatever we embed in it far more consistently than humans do. I think the example of triage, or even Raumpatrouille Orion, makes it clear that there are major risks here that could even rob us of control over technology.

If we really lose control, then we have created a homunculus. We become the creator of an “intelligence” that goes its own way. Thinking about what this means is beyond the scope and content of a blog article.

Innovations in the form of technologies are not only used by people for their own benefit; they also repeatedly harm people’s well-being, sometimes intentionally, sometimes simply because the consequences of their use are not understood. AI has possibilities that were previously hardly conceivable. And the damage caused by the much simpler innovations of the last 150 years is already so great that we may not be able to repair it.

Conclusion

AI is an attractor and, when used appropriately, can provide valuable support and even bring us one or two new ideas. It can take work off our hands, help us make decisions and help us cope better with the shortage of skilled labour. At the same time, it is not intelligent in the human sense. But it can provide valuable support wherever rational aspects are involved.

For this to succeed, AI still belongs exclusively in the hands of experts. They must take measures to keep their expertise up to date, because AI is fast and has a huge store of knowledge. In the blink of an eye, an expert can become a layperson and lose the necessary control over the technology through convenience, lack of insight or time pressure. In the harmless case, this merely squanders the opportunities AI offers.

And this brings us full circle: AI is not suitable for the masses – except perhaps to make a profit. Its risks increase with careless use and its opportunities do not materialise. And perhaps we should also give it a different name in the interests of better expectation management.

Extra bonus

Here you will find three additional questions answered by Astrid Kuhlmey:

How fair is it to accuse an AI of lacking intuition?

Astrid Kuhlmey: In my opinion, it’s not about reproach or fairness. The question is rather what AI is designed for, what it can and cannot do.

Most of us unconsciously associate the term intelligence with human intelligence; just recently, a colleague referred to “AI as another colleague” in a LinkedIn comment. In the article, I try to make it clear that AI does not have human intelligence, while at the same time it is significantly faster in the cognitive-rational part and can handle much more information than a human.

Uncertainty research has worked out that this other part of our intelligence that a technology does not have (I’ll call it the intuitive part here) is essential for acting and making decisions. This is particularly evident in situations of uncertainty (see Böhle), but can also be observed in everyday life (see Gigerenzer).

In my observation, organisations often smile at the topic of “intuition”. This is part of the industrial culture of certainty that unfortunately still dominates our thinking. So it is not surprising that we use the term intelligence to describe AI, compare it with colleagues or accuse it of something, which is perhaps not fair. 😉

Where do you get the hope that the use of AI can be steered in a generally desirable direction through social discourse?

Astrid Kuhlmey: On the one hand, I am optimistic, because in the last century humanity already faced challenges similar to today’s (Weizenbaum for AI, the hole in the ozone layer for climate change). We have solved both. My hope is based on the scientifically substantiated assumption that human beings are capable of learning and sensing and that they grow in the face of challenges, even if (or precisely because) we first put the obstacles in our own way.

On the other hand, I am somewhat pessimistic, because we had completely different social, political and individual conditions with completely different values when it came to the aforementioned issues in the past. As I study history a lot, I see parallels with the late phases of other advanced civilisations.

I take a pragmatic view of the current situation, with more technology and less genuine closeness to nature. Either we will manage the turn towards a culture that can distinguish between living life and technology and does not prioritise economic efficiency over liveliness, or we will not. It’s a bit like the attitude of the Enterprise crew in a crisis: we either overcome it or we don’t. And the series may well continue without us. I think that would be a shame, and at the same time not so new historically.

What real new applications could there be for AI?

Astrid Kuhlmey: In the current situation, I’m not thinking about that. I am in favour of us being experts, but we are not experts in something completely new. I don’t think it’s safe to let a technology whose “intelligence” has narcissistic aspects think up something completely new without sufficiently discussing the potential side effects.

In the current situation, I recommend that we slow down our use of technical possibilities so that we humans can keep up. As we know from climate change, indigenous peoples were not wrong when they warned that some things in industrialisation were happening too quickly. And perhaps this is an error that “characterises” our Western culture; if so, see https://t2informatik.de/blog/welcher-fehler-zeichnet-sie-aus/.

Notes (partly in German):

[1] Prof. Fritz Böhle (2017), Subjektivierendes Handeln – Anstöße und Grundlagen, in: Arbeit als Subjektivierendes Handeln, pp. 3–32

[2] GPM (2016), Expertise der GPM zum Umgang mit Ungewissheit in Projekten

[3] In uncertainty research, weak signals are diffuse signals for upcoming events that are perceived physically.

[4] What we can learn from starship Enterprise

[5] Cf. Gerd Gigerenzer (2007), Bauchentscheidungen, Bertelsmann

[6] Letting go is the new way of planning

[7] Cf. the GPM expertise cited above

[8] For more on the relevance of projectification and the associated importance of project management in society, see Prof. Dr Reinhard Wagner (2021), Projektifizierung der Gesellschaft in Deutschland


Astrid Kuhlmey has published further articles in the t2informatik Blog.

Astrid Kuhlmey

Computer scientist Astrid Kuhlmey has more than 30 years of experience in project and line management in pharmaceutical IT. She has been working as a systemic consultant for 7 years and advises companies and individuals in necessary change processes. Sustainability as well as social and economic change and development are close to her heart. Together with a colleague, she has developed an approach that promotes competencies to act and decide in situations of uncertainty and complexity.