Distributed autonomous microservices with event-driven architecture

Many organisations use microservices, but doing so makes synchronous communication increasingly difficult. It is no longer possible to ensure that all systems are available at all times and that all changes are consistent at every point. Moreover, the tight coupling of services through synchronous communication can mean that the failure of one service brings down a number of other services with it. Typical resilience measures such as circuit breakers, retries and service meshes in turn increase the complexity of the overall system and only partially solve the actual problem.

In this article, I would like to explain different types of architecture and asynchronous communication, discussing the advantages, disadvantages and challenges of each type.

Request-driven architecture

The classic microservice architecture, often referred to as the request-driven or request/response model, is based on direct requests between services, usually via RESTful APIs. In this model, one service sends a request to another and waits for the response before proceeding. This method is easy to understand as it follows a simple question-answer scheme similar to human dialogue: One service asks for information or requests an action, another responds.

This direct form of communication enables structured and sequential execution of business processes, which simplifies development and debugging. However, it also leads to tight coupling between the services. This means that the services are heavily dependent on each other: if a service fails or is delayed, this can have a direct impact on the performance and availability of the overall system. Another problem is scalability, as the effort required for management and monitoring grows rapidly with the number of services and the requests between them.

Furthermore, with this approach, changes to the interface (API) often require both the requesting and the responding service to be updated, which increases the maintenance effort. Failures can also lead to chain reactions in which the failure of a single service triggers an avalanche of problems for other services. This model requires high availability and reliability of each individual microservice, which is difficult to achieve in practice, especially in complex systems with many services.
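
The chain reaction described above can be illustrated with a minimal sketch. It is not taken from a real system: the service names (`inventory_service`, `order_service`, `checkout_service`) and the `ServiceUnavailable` exception are hypothetical, and plain function calls stand in for synchronous HTTP requests.

```python
class ServiceUnavailable(Exception):
    """Raised when a (hypothetical) downstream service cannot be reached."""


def inventory_service(item_id: str, available: bool = True) -> int:
    # Stand-in for a synchronous REST call to an inventory service.
    if not available:
        raise ServiceUnavailable("inventory")
    return 5  # illustrative stock level


def order_service(item_id: str, inventory_up: bool = True) -> dict:
    # The order service blocks until the inventory service has answered.
    stock = inventory_service(item_id, available=inventory_up)
    return {"item": item_id, "accepted": stock > 0}


def checkout_service(item_id: str, inventory_up: bool = True) -> str:
    # The checkout service in turn blocks on the order service.
    order = order_service(item_id, inventory_up=inventory_up)
    return "confirmed" if order["accepted"] else "rejected"


# Healthy chain: every service answers.
healthy = checkout_service("book-1")

# A single failing service at the end of the chain fails the whole request.
try:
    checkout_service("book-1", inventory_up=False)
    cascade_failed = False
except ServiceUnavailable:
    cascade_failed = True
```

Neither `order_service` nor `checkout_service` contains a fault of its own here; they fail only because they wait on a dependency that is down.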

Event-driven architecture

The event-driven model is an architectural strategy that aims to simplify and improve the interaction between microservices by promoting loose coupling and asynchronous communication. It can be thought of as a large network in which each node (service) acts independently and only responds to certain signals or “events” that flow through the system.

At the centre of this system is the publish-subscribe model: a service (the producer) publishes an event, e.g. the update of a data set, without knowing or caring who receives this event. Other services (the consumers) that are interested in this specific event have subscribed in advance to be informed about it and can then react accordingly, be it by updating data, triggering further processes or other actions.

This decoupling enables the services to work and scale independently of each other. If a service fails, this has no direct impact on the functionality of other services as long as the event messages are handled correctly. This increases the reliability and availability of the overall system. As the services communicate asynchronously, they can continue their tasks without having to wait for an immediate response, which increases efficiency and reduces bottlenecks.
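
The publish-subscribe interaction described above might be sketched as follows. The `EventBus` class is a hypothetical in-memory stand-in for a message broker, and the event name `order_placed` is an assumption made for illustration. Unlike a real broker, this sketch delivers events synchronously and does not buffer them, so it shows the decoupling of producer and consumers but not the asynchronous delivery.

```python
from collections import defaultdict
from typing import Callable

Event = dict
Handler = Callable[[Event], None]


class EventBus:
    """Hypothetical in-memory stand-in for a message broker."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[Handler]] = defaultdict(list)

    def subscribe(self, event_type: str, handler: Handler) -> None:
        self._subscribers[event_type].append(handler)

    def publish(self, event_type: str, event: Event) -> None:
        # The producer does not know who, if anyone, is listening.
        for handler in self._subscribers[event_type]:
            handler(event)


bus = EventBus()
shipped: list[str] = []
mailed: list[str] = []

# Two independent consumers subscribe to the same event type.
bus.subscribe("order_placed", lambda e: shipped.append(e["order_id"]))
bus.subscribe("order_placed", lambda e: mailed.append(e["order_id"]))

# The producer publishes once; both consumers react.
bus.publish("order_placed", {"order_id": "o-1"})
```

Adding a third consumer would require only another `subscribe` call; the producer's code would remain unchanged.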

Another advantage of the event-driven architecture is its scalability. As the services are not directly dependent on each other, they can be scaled independently of each other according to demand. This enables more efficient resource utilisation and better adaptation to load distribution.

However, this flexibility also brings challenges, particularly in the area of state management and ensuring consistency across distributed transactions. Without a central orchestrator, it can be more difficult to manage complex business processes and ensure that all parts of the system remain consistent. Despite these challenges, event-driven architecture provides a solid foundation for developing scalable and robust microservice systems by taking advantage of decoupling and asynchronous communication.

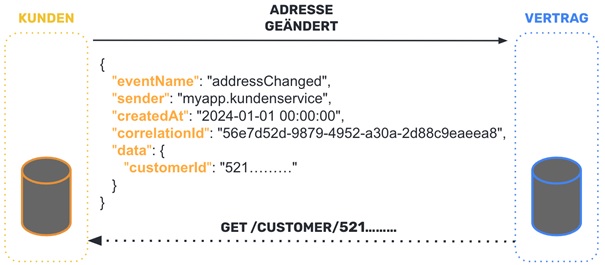

Event notification

In event-driven architectures, the event notification pattern plays a central role in communication between the components. Events are used to inform loosely coupled parts of the system that something relevant has happened. A key aspect of this pattern is that the notification events are generally simple in structure and only contain basic information about what has occurred. This simplicity means that versioning of the event messages is rarely necessary and the system components can be kept consistent.

A critical point that requires additional consideration is the situation where a consumer needs additional data from the producer to process an event. This dependency leads to a direct coupling between the two, as the consumer may need to send a request to the producer to obtain the additional information needed. This has two major implications for the system design:

1. Availability of the producer

After the publication of an event, the producer must be available to handle possible requests from consumers. This contradicts the ideal of loose coupling, as the consumer’s ability to react to the event depends directly on the producer’s availability.

2. Performance and scalability

The need for the consumer to request additional data from the producer can affect the overall performance and scalability of the system. Each request causes additional network traffic and can lead to bottlenecks under high load. In addition, the latency of event processing increases as the consumer has to wait for the producer’s response before it can fully process the event.

Taking the CAP theorem into account, it becomes clear that the decision to ensure a high level of consistency within the system by means of direct requests from consumers to producers can impair availability and performance. Especially in scenarios where there is a need to ensure high availability, these direct dependencies can be problematic.

A possible solution could be the use of techniques such as caching or the implementation of a more comprehensive event enrichment process before the event is published. This would allow the required data to already be included in the event notification, reducing the need for direct requests from the consumer to the producer. This would help to maintain loose coupling, increase availability and improve performance in distributed systems.
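
The dependency on the producer, and the caching approach mentioned above, can be sketched as follows. All names (`CustomerService`, `BillingConsumer`, `ProducerDown`, the `customer_changed` event) are hypothetical, and a method call stands in for the consumer's request to the producer. The sketch assumes that a possibly stale cached copy is acceptable when the producer cannot be reached.

```python
class ProducerDown(Exception):
    """Hypothetical error for an unreachable producer."""


class CustomerService:
    """Producer: owns the customer data and answers follow-up requests."""

    def __init__(self) -> None:
        self.available = True
        self._customers = {"c-1": {"name": "Ada", "city": "Berlin"}}

    def get_customer(self, customer_id: str) -> dict:
        if not self.available:
            raise ProducerDown(customer_id)
        return dict(self._customers[customer_id])


class BillingConsumer:
    """Consumer: receives thin notifications and fetches details on demand."""

    def __init__(self, producer: CustomerService) -> None:
        self._producer = producer
        self._cache: dict[str, dict] = {}

    def on_customer_changed(self, event: dict) -> dict:
        customer_id = event["customer_id"]  # the notification carries only the id
        try:
            details = self._producer.get_customer(customer_id)
            self._cache[customer_id] = details
        except ProducerDown:
            # Fall back to the last known copy; raises KeyError if none exists.
            details = self._cache[customer_id]
        return details


producer = CustomerService()
consumer = BillingConsumer(producer)
notification = {"type": "customer_changed", "customer_id": "c-1"}

first = consumer.on_customer_changed(notification)   # fetched from the producer
producer.available = False
second = consumer.on_customer_changed(notification)  # served from the cache
```

The cache keeps the consumer working while the producer is down, but only for data it has already seen, and at the price of possibly outdated values.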

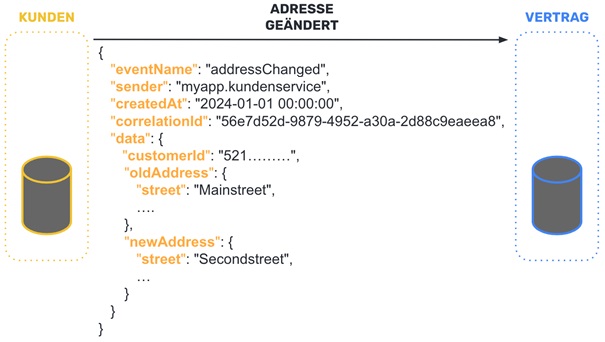

Event-carried state transfer

Event-carried state transfer in event-driven architectures is a scheme that aims to eliminate the need for consumers to request additional data from the producer after an event has been received. This is achieved by including all relevant data that a consumer might need in the event itself. This scheme promotes autonomy and decoupling between the components of a system by ensuring that consumers are able to execute their processing logic independently and without external dependencies.

Autonomy

As each event carries all the necessary information with it, consumers can act independently of producers. This reduces the dependencies between the services and improves the system’s fault tolerance.

Decoupling

Producers and consumers are less strongly coupled, as consumers do not have to return to the producer to obtain missing information. This facilitates the development and maintenance of system components.

Efficiency

The direct availability of all required data in the event reduces the need for additional network calls, which improves the overall performance and responsiveness of the system.
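
A minimal sketch of the three properties above, assuming a hypothetical `CustomerChanged` event that carries the full customer state and a hypothetical `ShippingConsumer` that builds its own local copy from these events:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CustomerChanged:
    """Hypothetical event that carries the full customer state."""
    customer_id: str
    name: str
    city: str


class ShippingConsumer:
    """Keeps its own copy of the customer data, built only from events."""

    def __init__(self) -> None:
        self._customers: dict[str, CustomerChanged] = {}

    def on_customer_changed(self, event: CustomerChanged) -> None:
        # Everything the consumer needs arrives with the event itself.
        self._customers[event.customer_id] = event

    def shipping_label(self, customer_id: str) -> str:
        # No call back to the producer is needed here.
        customer = self._customers[customer_id]
        return f"{customer.name}, {customer.city}"


consumer = ShippingConsumer()
consumer.on_customer_changed(CustomerChanged("c-1", "Ada", "Berlin"))
label = consumer.shipping_label("c-1")
```

The consumer holds no reference to the producer at all, so it can keep producing labels even if the producer is offline.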

As the structure of the data transmitted in an event can change over time, events must be versioned to ensure that consumers are able to understand and correctly process different versions of the data. This increases the complexity of the system design and requires careful planning to avoid compatibility issues.
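
One common way for a consumer to cope with several event versions is to convert older versions to the newest shape before processing them, sometimes called upcasting. The article does not prescribe this technique; the sketch below is only one option, and the `version` field and both event shapes are assumptions made for illustration.

```python
def upcast_customer_changed(event: dict) -> dict:
    """Convert any known version of the event to the latest shape (v2)."""
    version = event.get("version", 1)  # events without a version count as v1
    if version == 1:
        # Assumed v1 shape: a single "name" field; v2 splits it in two.
        first, _, last = event["name"].partition(" ")
        return {
            "version": 2,
            "customer_id": event["customer_id"],
            "first_name": first,
            "last_name": last,
        }
    if version == 2:
        return event
    raise ValueError(f"unsupported event version: {version}")


def handle(event: dict) -> str:
    # The processing logic only ever sees the latest version.
    current = upcast_customer_changed(event)
    return f"{current['last_name']}, {current['first_name']}"


old = handle({"customer_id": "c-1", "name": "Ada Lovelace"})
new = handle({"version": 2, "customer_id": "c-1",
              "first_name": "Ada", "last_name": "Lovelace"})
```

Both calls yield the same result, so producers can move to the new version without every consumer having to change at the same moment.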

In the context of the CAP theorem, event-carried state transfer involves an interesting trade-off: it improves availability and partition tolerance by reducing the need for direct communication between producers and consumers, but it can compromise consistency.

The data transferred in the event may be out of date at the point of processing. This can lead to inconsistencies, especially in fast-paced environments where data is frequently updated.
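
Staleness cannot be removed entirely, but a consumer can at least avoid overwriting newer state with an older event that arrives late. The sketch below assumes that the producer attaches an increasing `sequence` number per customer to every event; this field and the `CustomerReplica` class are hypothetical.

```python
class CustomerReplica:
    """Consumer-side copy that ignores events older than the one it holds."""

    def __init__(self) -> None:
        self._state: dict[str, dict] = {}

    def apply(self, event: dict) -> bool:
        current = self._state.get(event["customer_id"])
        if current is not None and event["sequence"] <= current["sequence"]:
            return False  # stale or duplicate event: keep the newer state
        self._state[event["customer_id"]] = event
        return True

    def city(self, customer_id: str) -> str:
        return self._state[customer_id]["city"]


replica = CustomerReplica()
# The newer event (sequence 2) happens to arrive before the older one.
applied_new = replica.apply({"customer_id": "c-1", "sequence": 2, "city": "Hamburg"})
applied_old = replica.apply({"customer_id": "c-1", "sequence": 1, "city": "Berlin"})
```

The late event is discarded, so the replica keeps the most recent city it knows of. It may still lag behind the producer until the next event arrives.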

Event-carried state transfer is a powerful way to promote autonomy and decoupling in event-driven architectures. By embedding all necessary data directly in the event, consumers can operate independently of producers, which improves the robustness and efficiency of the system. However, the pattern also brings challenges, especially in terms of event versioning and data freshness, so its benefits and drawbacks need to be weighed carefully before it is applied. In terms of the CAP theorem, event-carried state transfer trades immediate consistency for higher availability and partition tolerance, with eventual consistency serving as the compromise that still ensures consistent system behaviour over time.

Event sourcing

Event sourcing is an architectural approach that aims to store all changes to the state of an application as a sequence of events. These events represent not only the changes that were made to the system, but also the context in which they took place, including the point in time and the cause. This creates a detailed chronicle of all processes within the application, making it possible to reconstruct the state of the system at any point in the past.

Immutability of events

Once events have been saved, they can no longer be changed or deleted. This ensures that the history of application states always remains traceable and consistent. Changes in the system are represented by the addition of new events.
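
A minimal sketch of an append-only store, assuming hypothetical `Event` and `EventStore` classes and an illustrative bank-account domain. A frozen dataclass is used here to make individual events immutable in memory; a real event store would enforce this at the storage level.

```python
from dataclasses import dataclass, FrozenInstanceError


@dataclass(frozen=True)
class Event:
    kind: str
    amount: int


class EventStore:
    """Hypothetical append-only store: events can be added and read, nothing else."""

    def __init__(self) -> None:
        self._events: list[Event] = []

    def append(self, event: Event) -> None:
        self._events.append(event)

    def all(self) -> tuple[Event, ...]:
        # A tuple is returned so callers cannot modify the stored sequence.
        return tuple(self._events)


store = EventStore()
store.append(Event("deposited", 100))
# A mistaken deposit is corrected by a new event, not by editing the old one.
store.append(Event("deposit_reversed", 100))

try:
    store.all()[0].amount = 50  # attempt to rewrite history
    mutated = True
except FrozenInstanceError:
    mutated = False
```

The store deliberately offers no update or delete method, so the correction remains visible in the history alongside the original mistake.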

State reconstruction

The current state of an application can be reconstructed from the beginning by sequentially processing all stored events. This makes it possible to reconstruct any state in the past and to analyse how certain decisions have affected the current state.
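
State reconstruction amounts to folding the stored events into a state, starting from an empty one. The sketch below assumes an illustrative account with `deposited` and `withdrawn` events; the event shapes and function names are hypothetical.

```python
from functools import reduce
from typing import Optional

events = [
    {"kind": "deposited", "amount": 100},
    {"kind": "withdrawn", "amount": 30},
    {"kind": "deposited", "amount": 50},
]


def apply(balance: int, event: dict) -> int:
    """Return the balance that results from applying one event."""
    if event["kind"] == "deposited":
        return balance + event["amount"]
    if event["kind"] == "withdrawn":
        return balance - event["amount"]
    raise ValueError(f"unknown event kind: {event['kind']}")


def balance_after(events: list, count: Optional[int] = None) -> int:
    """Replay the first `count` events (all of them if count is None)."""
    return reduce(apply, events[:count], 0)


current_balance = balance_after(events)       # 100 - 30 + 50
balance_at_step_2 = balance_after(events, 2)  # 100 - 30
```

Replaying only a prefix of the event sequence yields the state as it was at that point in the past.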

Audit and analysis capability

As all changes are recorded as events, event sourcing provides a natural audit trail. This is particularly valuable for applications that need to fulfil strict compliance requirements or where full traceability is required.

Flexibility in the data view

Event sourcing enables the creation of different views of the stored data. These views can be optimised to meet the requirements of different use cases without having to change the basic event storage. For example, summaries, aggregations or specific projections of the data state can be created to improve performance or support specific analysis requirements.
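
As a sketch of this idea, two different views can be derived from the same list of events without touching the events themselves. The `item_added` event and both projection functions are hypothetical examples.

```python
from collections import Counter

events = [
    {"kind": "item_added", "order_id": "o-1", "sku": "book", "price": 20},
    {"kind": "item_added", "order_id": "o-1", "sku": "pen", "price": 5},
    {"kind": "item_added", "order_id": "o-2", "sku": "book", "price": 20},
]


def order_totals(events: list) -> dict:
    """View 1: total value per order."""
    totals: Counter = Counter()
    for event in events:
        if event["kind"] == "item_added":
            totals[event["order_id"]] += event["price"]
    return dict(totals)


def units_per_sku(events: list) -> dict:
    """View 2: number of units sold per product."""
    units: Counter = Counter()
    for event in events:
        if event["kind"] == "item_added":
            units[event["sku"]] += 1
    return dict(units)


totals_view = order_totals(events)
units_view = units_per_sku(events)
```

A further view can be added later as another function over the same events, including events that were recorded before the view existed.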

Future-proofing

Another advantage of event sourcing is the ability to apply new views or processing logic to events that have already been collected. This allows applications to be flexibly adapted to new requirements without having to change the underlying data structure. For example, new functions can be implemented based on data analyses or behaviour patterns that were not envisaged at the point of the original event collection.

Event sourcing offers many advantages, but also brings challenges. Managing a growing volume of event data can be demanding in terms of storage requirements and processing speed. In addition, implementing an event sourcing system requires careful planning, particularly with regard to event modelling and ensuring system performance.

Summary

The event-driven architecture is a flexible and robust foundation for the development of distributed, autonomous microservices, as it supports decoupling and asynchronous communication between services. The keys to success in such a system are an understanding of the functional requirements, as well as knowledge of various patterns and the associated trade-offs.

Implementing an event-driven architecture requires careful consideration of the pros and cons, as well as adaptive planning to fully realise the potential and maintain system integrity. By applying these principles, developers can create powerful and future-proof microservice landscapes that meet both current and future requirements.

Notes:

If you are interested in serverless architectures, please make an appointment with Florian Lenz. He will be happy to support you as a strategic partner from consulting and analysis to conceptualisation and architecture through to operational implementation.

Florian Lenz has published another article in the t2informatik blog:

Florian Lenz

Florian Lenz is the founder of neocentric GmbH, a certified Microsoft trainer and conference speaker. He has a great deal of expertise in Azure, organises meetups and runs a YouTube channel on Azure Serverless. He founded neocentric with the aim of strengthening companies in their efficiency, cost reduction and competitiveness. His specialisation in serverless solutions enables him to turn innovative ideas into leading products. He likes to do this in an authentic, down-to-earth and practical way.