How effective are AI-supported code reviews really?

A field report on code reviews with CodeRabbit, Greptile and Copilot

Code reviews are essential in software development. They help teams find errors early, maintain high code quality, ensure adherence to team guidelines and share knowledge. As important as they are, code reviews take time, can block other work and are not always completely objective. In stressful phases, they are sometimes carried out only superficially.

At the same time, AI has developed rapidly in recent years. Language models can now ‘understand’ and generate not only text but also complex code, so it is hardly surprising that they are also being used for code reviews. A whole range of tools now analyse pull requests and provide automatic feedback. That sounds practical, but how does it work in practice?

A selection of AI tools and a project for testing

The range of AI tools is already huge, and choosing a provider is not easy. I chose CodeRabbit [1], Greptile [2] and GitHub Copilot [3]. While CodeRabbit and Greptile specialise in code reviews, Copilot also covers many other aspects of programming.

A small full-stack project is used for testing: an expenses tracker where users can register and record expenses by category. Technically, it consists of an Express API (TypeScript, Zod, Prisma, PostgreSQL) and a Next.js frontend. This keeps the project manageable, but at the same time provides enough complexity to really challenge the tools.

All tools run with their default settings on the same code base. Identical pull requests are created for each tool so that the results remain comparable.

I want to stress that this is not a classic comparison test with score tables or ‘winners’. My aim is to find out how well AI reviews currently work, where their limits lie and whether there are fundamental differences between the tools.

To challenge the tools further, the pull requests deliberately include small errors, architectural changes and missing adjustments between frontend and backend. This reveals how reliably the tools detect problems, whether they provide meaningful suggestions for improvement and whether they consider the code in its overall context.

In addition, each tool has its own public repository containing two pull requests. The changes are identical across all repositories, so the results can be compared directly and give a differentiated picture of the individual tools.

Observations during code reviews

Once the foundations have been laid and all services are running under the same conditions, the more exciting part begins: How do the reviews actually feel? What kind of feedback do I receive, how reliably are errors detected, and where are the differences?

Setting up the services is straightforward. GitHub Copilot is ready to use right away, as the review function is integrated into GitHub. For CodeRabbit and Greptile, you only need to connect the repository, which takes just a few clicks.

In practice, AI-supported reviews deliver feedback almost immediately, without long waiting times. Several things stand out:

- Many of the deliberately built-in errors are detected, but not all of them.

- In addition to the error messages, comments on improving code quality appear, which are often helpful, but occasionally superfluous or inaccurate.

- In some cases, the tools detect connections between the frontend and backend, even if the changes only affect one of them.

- Comments on the general code structure are rather rare.

- There are no completely inappropriate or grossly incorrect suggestions.

- The automatically generated summaries of the changed code locations make it easier to get an overview, but sometimes contain minor inaccuracies.

- The number of comments varies significantly depending on the tool.

To make the results more tangible, I would like to highlight a few specific situations from the tests:

An incorrect API call in the frontend, whose URL does not match the backend, is reliably detected. The tools also flag a missing ‘await’ and a missing check for disabling a button, which is meant to prevent unnecessary API calls. All services also point out that a newly added route lacks the auth middleware and does not send a suitable response. This is a clear and critical error.
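To make two of these error classes more concrete, here is a minimal TypeScript sketch. It is hypothetical and not taken from the test repositories: `createExpense`, `onSubmit`, `requests` and `isSubmitting` are invented names, and the sketch assumes the button is supposed to be disabled while a request is still pending. It shows why the ‘await’ matters: without it, the guard is released before the request has finished.

```typescript
// Hypothetical sketch; names are illustrative, not taken from the test project.

// Stand-in for the real API client: records each request and simulates latency.
const requests: number[] = [];

async function createExpense(amount: number): Promise<void> {
  requests.push(amount);
  await new Promise<void>((resolve) => setTimeout(resolve, 50));
}

// Plays the role of the button's disabled state
// (assumption: the button should be disabled while a request is pending).
let isSubmitting = false;

async function onSubmit(amount: number): Promise<void> {
  if (isSubmitting) return; // ignore clicks while a request is in flight
  isSubmitting = true;
  try {
    // Without `await`, the `finally` block would run immediately and
    // re-enable the button before the request has finished.
    await createExpense(amount);
  } finally {
    isSubmitting = false;
  }
}

// Simulate a fast double click: only the first click reaches the API.
void onSubmit(12.5);
void onSubmit(12.5);
console.log(requests.length); // 1
```

In a React component, the same flag would typically also drive the button's `disabled` attribute, so the guard is visible in the UI as well.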

However, the tools do not detect other problems consistently. Only Greptile reports an unused backend query in the frontend. GitHub Copilot misses an error in the expenses query filter. Conversely, only Copilot notices, albeit only on the second run, that the reset button does not reset all filters.
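The reset problem is a typical pattern when each filter field is reset by hand. The following TypeScript sketch is hypothetical: the `ExpenseFilters` fields and both function names are invented for illustration and do not come from the test project. It contrasts a field-by-field reset that forgets one filter with a reset derived from a single defaults object.

```typescript
// Hypothetical filter state for an expenses list; field names are illustrative.
interface ExpenseFilters {
  category: string | null;
  from: string | null; // ISO date, e.g. "2024-01-01"
  to: string | null;
  search: string;
}

// Single source of truth for the "no filter" state.
const DEFAULT_FILTERS: ExpenseFilters = {
  category: null,
  from: null,
  to: null,
  search: "",
};

// Error-prone: every field is reset by hand, and `to` has been forgotten.
function resetFiltersBuggy(current: ExpenseFilters): ExpenseFilters {
  return { ...current, category: null, from: null, search: "" };
}

// Safer: return a fresh copy of the defaults, so a newly added
// filter field cannot be missed in the reset handler.
function resetFilters(): ExpenseFilters {
  return { ...DEFAULT_FILTERS };
}

const active: ExpenseFilters = {
  category: "food",
  from: "2024-01-01",
  to: "2024-01-31",
  search: "lunch",
};

console.log(resetFiltersBuggy(active).to); // 2024-01-31 (date filter still active)
console.log(resetFilters().to); // null
```

Returning a copy rather than the defaults object itself keeps later state updates from accidentally mutating the shared defaults.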

Unused code is not detected consistently either. Only CodeRabbit flags a superfluous import, and even that is hidden in its so-called ‘nitpick’ comments. It is also striking that none of the services addresses the inconsistent file naming. As a result, it goes unnoticed that the newly created file ‘categories.controller.ts’ does not fit the existing naming scheme.

The additional notes on details such as the use of the Date object in the frontend, better error handling or more precise typing are useful and provide valuable food for thought. However, there are also less meaningful comments with no apparent added value, for example when Copilot recommends replacing a simple comparison with a ternary operator.

The automatically generated summaries from the providers work well overall and help you get up to speed with the code more quickly. Nevertheless, errors do creep in here too. For example, CodeRabbit and Copilot incorrectly interpret a moved property as a new addition.

Special features of CodeRabbit, Greptile and GitHub Copilot

In addition to the general impressions, it is worth taking a closer look at the specific features of each service. Each tool has its own focus in the review process and handles comments, summaries and additional information differently.

CodeRabbit

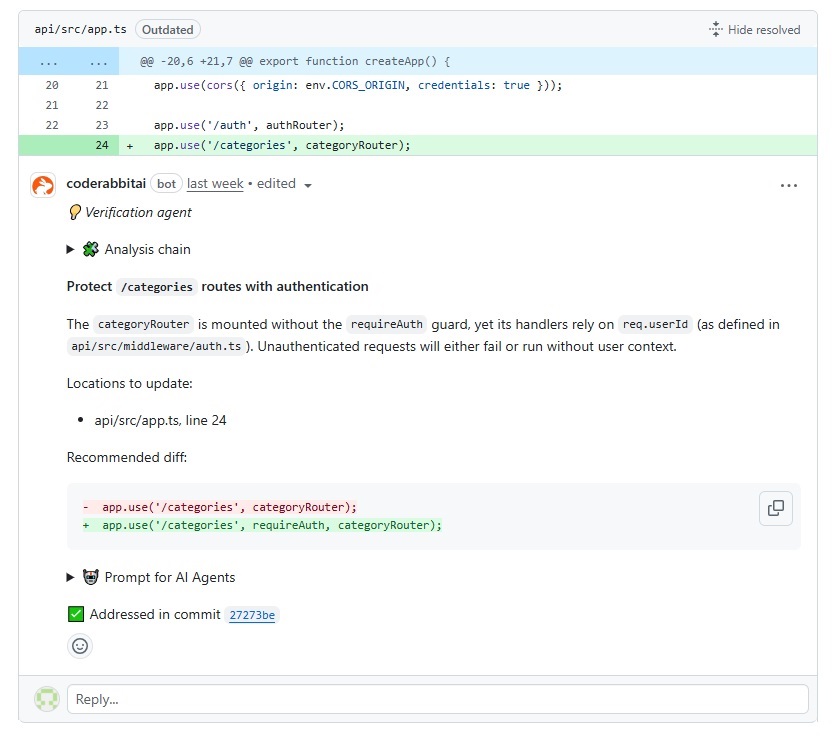

CodeRabbit provides a comparatively large number of comments, including so-called ‘nitpick’ notes: minor remarks that only become visible when expanded. The idea is that details should not distract, but remain available when needed. The concept makes sense in principle, but in practice I had the impression that some points ended up there that were important enough not to be ‘hidden’. This creates a risk of overlooking relevant information.

In addition, CodeRabbit expands the PR description with a short summary. There is also a detailed walkthrough, supplemented by a sequence diagram. The comments themselves are mostly detailed and often contain small code snippets, which makes the feedback easy to understand.

Figure 1: Example CodeRabbit comment

Greptile

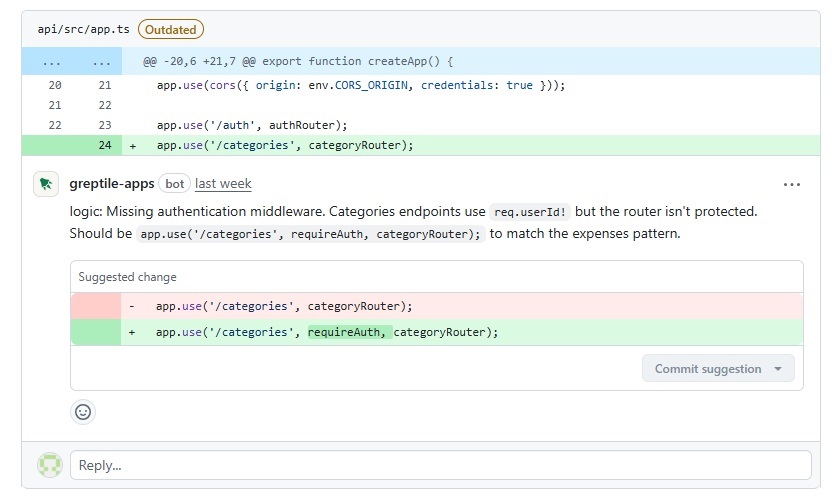

Greptile supplements every code review with a clear summary that helps you get an overview quickly. Another interesting feature is the confidence score, an assessment of how safely the pull request can be merged. If problems remain, they are briefly listed there. This is a practical approach. However, in some instances comments appeared only in this summary and not as separate review comments. Such points are then easy to overlook or difficult to understand.

The number of comments was lower overall with Greptile than with CodeRabbit. They were usually more compact in their wording, but in many cases still sufficiently clear.

Figure 2: Example Greptile comment

GitHub Copilot

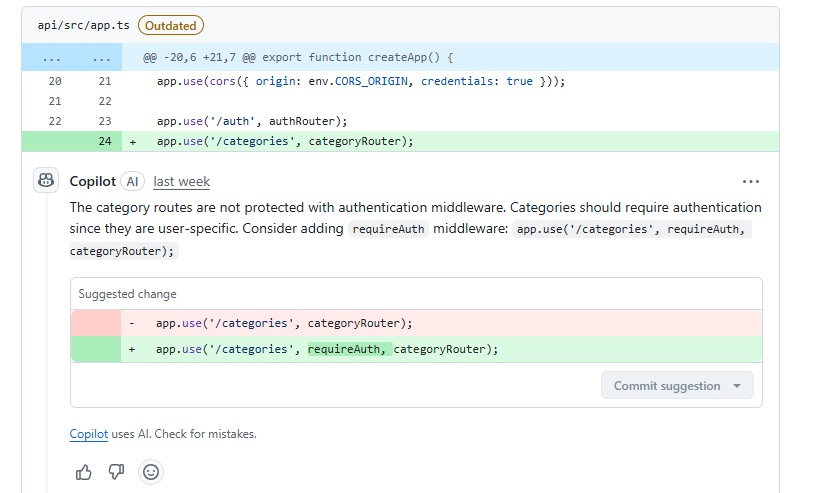

GitHub Copilot stands out as the most unobtrusive service. The PR summary is concise and, in many cases, sufficient to provide a quick overview. The number of comments is also significantly lower than with the other two services, and the notes are shorter.

However, one striking behaviour became apparent: after code changes and a restarted review, new comments often appeared that did not relate to the latest changes and should actually have appeared earlier. As a result, important notes were sometimes only visible on the second or third run. A single review run can therefore be incomplete, and relevant notes may be missed altogether.

Figure 3: Example GitHub Copilot comment

A separate look at data protection

Anyone who wants to use an AI tool for code reviews should definitely familiarise themselves with the information on data protection and security measures in advance. The following points provide an initial overview of CodeRabbit [4], Greptile [5] and GitHub Copilot [6].

CodeRabbit

- Code is processed exclusively for reviews in an isolated environment and is not stored permanently.

- LLM providers only receive code diffs and contextual information.

- Proprietary code is not used in the training of language models.

- SOC 2 Type II certified (external audit of security and data protection measures).

Greptile

- Source code is deleted after the review is complete.

- Vector embeddings from file paths, documentation or AI-generated comments may be stored.

- Proprietary code is not used to train language models.

- SOC 2 Type II certified (external audit of security and data protection measures).

- Self-hosting possible, operation in own infrastructure.

GitHub Copilot

- Proprietary code is not used to train publicly available models without consent.

- In the settings, you can specify whether data such as suggestions or prompts may be used for model improvements.

- Copilot Business and Copilot Enterprise are SOC 2 Type II and ISO/IEC 27001 certified, among other things.

Even though the three providers clearly state their measures, it is advisable to always check the current data protection guidelines in detail before using the tools in real projects.

Conclusion

My conclusion on code reviews with AI tools is mixed. On the one hand, they cannot replace human reviews. Errors, unpredictable behaviour and inconsistencies occur too often, and the tools cannot view a project as a whole in the same depth as experienced developers. On the other hand, they reliably detect many errors and provide suggestions for improvement that complement pull requests in a meaningful way.

They can save time, especially as an initial check before a team member reviews the code. They are also valuable for solo developers, as they provide information on code quality and are a helpful support, especially when working with new technologies.

Another aspect is the question of price. If the tools were available free of charge, I would definitely recommend them, as they have proven to be a useful addition to everyday work. However, in the case of paid offerings, it is worth carefully considering whether the benefits justify the price. In any case, a reliable assessment is only possible through long-term use in realistic projects.

The issue of data protection remains equally important. Especially for companies or teams with sensitive code bases, it is advisable to take a close look at the providers’ guidelines. These should always be checked in detail and compared with your own requirements.

I don’t want to name a clear winner. Different code bases or project types can lead to different results. My first impression was that CodeRabbit and Greptile, as specialised review tools, seemed somewhat more reliable and helpful. At the same time, GitHub Copilot scores points with a low barrier to entry, as the review function is already included in an existing subscription. For many, this is likely to be the easiest way to gain initial experience with AI code reviews.

Notes:

[1] CodeRabbit

[2] Greptile

[3] GitHub Copilot

[4] CodeRabbit Trust Center and FAQ

[5] Greptile Security

[6] GitHub Copilot: Manage Policies and Trust Center


Christoph Bohne

Christoph Bohne works as a software developer at t2informatik.

As a career changer, he discovered on his unusual path into IT how important curiosity and openness to new things are. He enjoys learning and trying out new things. His best ideas often come to him while walking his dog.

In the t2informatik Blog, we publish articles for people in organisations. For these people, we develop and modernise software. Pragmatic. ✔️ Personal. ✔️ Professional. ✔️