A maturity model for agile requirements engineering

In addition to the role holders in the development teams, there is also a small unit of “central” REs. They are responsible for networking the role holders and maintaining the community, for the qualification concept for REs and for the professionalisation of the discipline. Once a uniform training programme (based on the IREB's CPRE[1]) had been established and had been running for two years, we naturally wanted to find out how the knowledge imparted was being received in the teams and in the REs' daily work. Assessing the maturity level is a recognised method for identifying potential for improvement in qualification and daily work and for deriving meaningful measures. The Kreitzberg model, for example, is used to assess a company's UX maturity. So it should also be possible to find a suitable model for determining maturity in agile requirements engineering.

The method of maturity models

Our decision was preceded by a critical and nuanced examination of maturity models as a method. Traditional models are characterised by the assumption that one automatically achieves a perfect result simply by following a defined process. However, this assumption only holds in a stable environment; it contradicts the complex and volatile environments typical of software development. The guiding principles of the Agile Manifesto, which value individuals and interactions over processes and tools and responding to change over following a plan, support this observation. In addition, established models usually assume “finished” artefacts and check whether they meet certain quality criteria (e.g. completeness). In agile approaches, however, one learns and improves primarily with “unfinished”, just-in-time results (keyword: MVP).

Maturity models are often used to build up and improve certain capabilities step by step. The stage concept necessarily includes a highest stage, and reaching it creates the illusory certainty of having achieved an optimum. This way of thinking also contradicts the values and principles that underpin mastering complex adaptive tasks. The decisive factor for us was not the maturity level of processes, but an organisation's ability to handle requirements well.[2]

After some research, it was clear that no existing model met both our need for transparency and the conditions of the environments in which requirements engineering is practised at DATEV. On the initiative of our central unit, and with the involvement of colleagues from the large company-wide RE community, we therefore set out to create our own maturity model for agile requirements engineering.

The focus was less on evaluating the work of individual employees than on assessing the maturity of the requirements engineering discipline in the development teams, which are often cross-functional.

The structure of the maturity model for agile RE

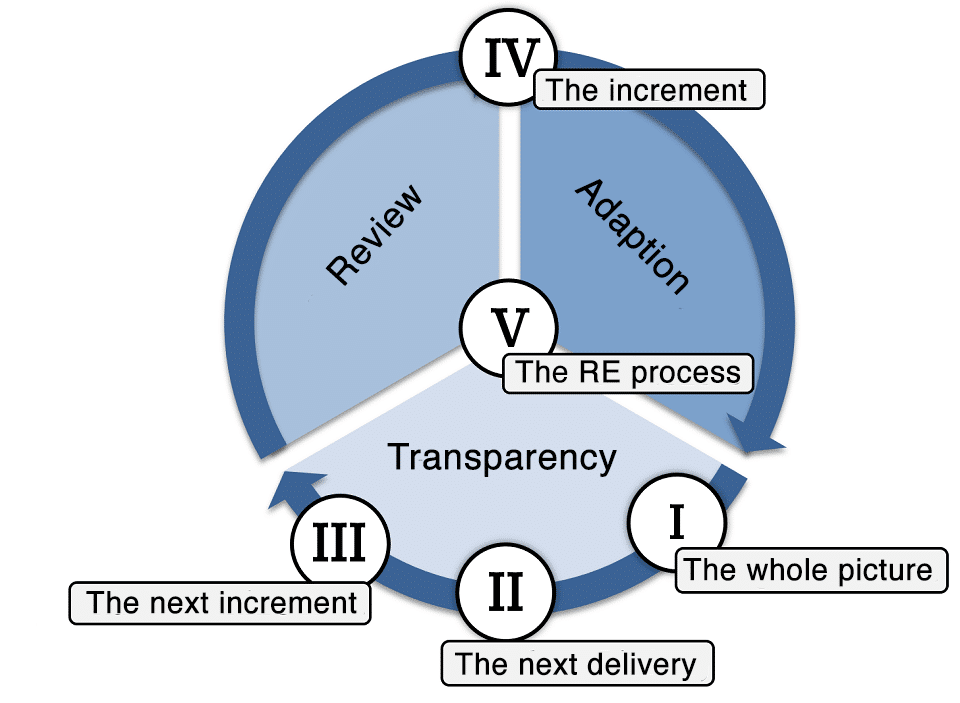

The basis for our considerations was Scrum, probably the best-known framework for agile software development. For our model, we also drew on the foundation of Scrum, the theory of empirical process control[3]. According to this theory, verifiable results are produced at regular intervals. These results are compared with the expectations for the product, checking whether the requirements that were to be fulfilled match those actually implemented. Any necessary adjustments directly shape future results. The three pillars of transparency, inspection and adaptation thus form the basic categories of our model.

Building on these pillars, the model distinguishes five evaluation levels:

- Keeping the big picture in mind. A product vision is the North Star for development. It addresses customer needs and sets the direction. It motivates the team to pull together in pursuit of a challenging goal.

- Planning the next delivery, which may span several sprints/iterations. In software development, this is also referred to as release planning. At this stage, it is important that requirements are still kept largely free of detail.

- In increment planning, the requirements are detailed, for example by specifying the acceptance criteria of user stories. Requirements can also be refined further during implementation as new insights are gained.

- The increment, i.e. the object of development, is reviewed and adapted.

- The requirements engineering process as actually practised is itself reviewed regularly and adapted where necessary.

We populated the model structure with a large number of example artefacts, events and rules and supplemented them with best practices.

Preparation of the survey

In the end, we decided to conduct an anonymous survey of the role holders in the form of a written questionnaire.

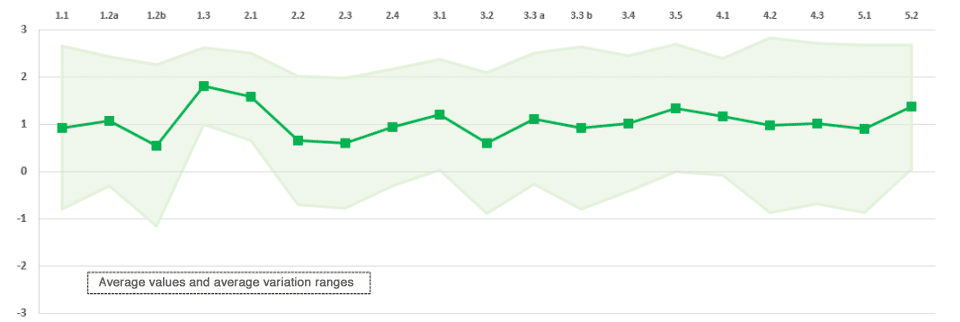

The questionnaire followed the structure of our maturity model, and the content of the questions was drawn from our collection of examples. Mirroring the five evaluation levels, participants were presented with five thematic blocks containing a total of 19 questions. These covered, for example, artefacts such as the product vision, methods for requirements elicitation and prioritisation, and events such as the team retrospective (all 19 questions are listed in the German-language questionnaire[5]).

Each question was answered on a scale from +3.0 (“agree completely”) to -3.0 (“disagree completely”). Free-text answers were not possible due to data protection regulations. The stage-based grading discussed above and familiar from other models is neither possible nor necessary for this model.

Collection and evaluation of results

We reported regularly on the progress and development of the model. To kick off the survey, we held additional community meetings to present the questionnaire and also informed about 280 role holders by e-mail. The key message was that respondents should assess not their own activities but how requirements engineering is handled in the development team as a whole. After a four-week response period, the response rate was 25%.

The evaluation of the results raised questions such as:

- Why is there such a wide spread in some of the assessments?

- What are the possible causes?

- How can these impediments be eliminated?
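The questions above start from the spread of answers to individual questions. As a rough illustration of how such a spread could be quantified on the +3.0 to -3.0 scale, here is a minimal Python sketch. It assumes the anonymous answers are available as numbers grouped by question; the question labels, the sample values, the use of the standard deviation as the spread measure and the threshold of 1.5 are all hypothetical choices, not the evaluation method or data of our survey.

```python
from statistics import mean, pstdev

# Hypothetical answers per question on the scale +3.0 ("agree completely")
# to -3.0 ("disagree completely"). Labels and values are illustrative only,
# NOT data from the actual survey.
answers = {
    "product vision": [3.0, 2.0, 2.5, 3.0],
    "prioritisation": [3.0, -3.0, 1.0, -2.0],
}

SCALE_MIN, SCALE_MAX = -3.0, 3.0
SPREAD_THRESHOLD = 1.5  # hypothetical cut-off for a "wide spread"


def summarise(values):
    """Return (mean, population standard deviation) of one question's answers."""
    if any(not SCALE_MIN <= v <= SCALE_MAX for v in values):
        raise ValueError("answer outside the -3.0..+3.0 scale")
    return mean(values), pstdev(values)


def wide_spread(answers, threshold=SPREAD_THRESHOLD):
    """List the questions whose answers scatter more than the threshold."""
    return [q for q, vals in answers.items() if summarise(vals)[1] > threshold]


for question, values in answers.items():
    m, s = summarise(values)
    print(f"{question}: mean {m:+.2f}, spread (SD) {s:.2f}")

print("Questions with a wide spread:", wide_spread(answers))
```

With these sample values, only the prioritisation question exceeds the threshold, because its answers range from strong agreement to strong disagreement. A large spread says nothing about its cause; it only marks where a closer look, along the lines of the questions above, is worthwhile.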

The results pointed in two directions. On the one hand, they yielded helpful recommendations for improving and further developing the questionnaire. On the other, we received valuable impulses for deriving concrete fields of action and possible measures. The consolidated results were compiled into a document that describes current challenges and possible solutions as well as established procedures and best practices for each field of action. Teams already use this document today to improve how they handle requirements engineering. At the same time, it guides the central support units on the topic areas in which further improvements can be achieved.

Conclusion

Some of the identified challenges and impediments have already been resolved over the past year. For example, a separate User Researcher role has been introduced to enable more regular and intensive customer engagement. The need for more effective reviews of results is being met by an expanded range of support services from the central quality unit. Additional tools for better cooperation between the roles involved have been developed and made available. The questionnaire itself has also been improved: in future, it will ask for the context in which the assessment is to be placed:

- What is the development procedure?

- Which technology is the product based on?

- Which phase of the product life cycle is the product in?

DATEV is not immune to the dynamics of change either. Among other things, additional roles (e.g. business analyst) have been defined whose tasks also fall within the discipline of requirements engineering. So if we want to make a statement about the maturity of the discipline, we have to widen the circle of respondents. This raises further questions that need to be clarified before the next survey:

- To determine the maturity of the requirements engineering discipline as a whole in a meaningful way, shouldn't everyone involved in the RE process be surveyed?

- Should we continue to collect individual assessments from the role holders, or would it be better to survey the teams as a whole?

- How can a regular survey be established that, above all, also benefits the further development of the discipline in the teams? (Could the Scrum Master be the right person to drive this, or the team retrospective a useful format?)

This maturity model arose from an internal need at DATEV. However, we deliberately chose a level of abstraction that allows other companies to adapt the model for themselves. We would be interested to hear whether there are other challenges or impulses beyond the questions already raised. Feel free to contact us.

Notes (some in German):

[1] CPRE (Certified Professional for Requirements Engineering), the globally recognised certification of the International Requirements Engineering Board (IREB)

[2] Optimieren von Requirements Management & Engineering, Colin Hood, Rupert Wiebel, Springer Verlag 2005

[3] Empirical process control as a core principle of Scrum (German: empirische Prozesssteuerung als Kernprinzip von Scrum)

[4] PDCA (Plan-Do-Check-Act) cycle

[5] Questionnaire for assessing maturity in agile requirements engineering (German: Fragebogen zur Bewertung des Reifegrads im agilen Requirements Engineering)

The Project Management Institute (PMI) also defines a maturity model, OPM3 (Organizational Project Management Maturity Model). It addresses “organisational project management” with a collection of best practices, concepts and methods.

Veit Joseph has published another article in the t2informatik Blog.

Veit Joseph

Veit Joseph started at DATEV eG as a requirements engineer in a central unit. His focus there was on the training and qualification of requirements engineers in cross-functional development teams and on the general professionalisation of the discipline. For some time now, he has been working in central quality management with the mission “Customer satisfaction through product quality”. ISO/IEC 25010, test engineering as a discipline in its own right and whole-team quality are key topics of his work.

Renate Boeck

Renate Boeck has been with DATEV eG for over 30 years and has worked in software engineering, systems analysis and requirements engineering from the very beginning. As “sponsor” of the Requirements Engineering role, she is also responsible for the professionalisation and qualification of requirements engineers and for organising the community. Her tasks therefore include the company’s internal training programme for requirements engineers and strengthening cooperation between the agile roles.